前言

不开心,不想说话……让我们直接进入这段有趣的学习之旅吧,试试两个月,甚至更短的时间内解决掉这门又臭又长的课程。

Bits, Bytes, and Integers

Representation information as bits

Everything is bits

Each bit is 0 or 1

By encoding/interpreting sets of bits in various ways

- Computers determine what to do (instructions)

- … and represent and manipulate numbers, sets, strings, etc…

Why bits ? Electronic Implementation

- Easy to store with bitsable elements

- Reliably transmitted on noisy and inaccurate wires

- Easy to indicate low level/high level

Bit-level manipulations

Representing & Manipulating Sets

Representing Sets

We can use bits to determine whether an element belongs to a set. Say each bit have index, which corresponds to a number from zero. If a bit is set to 1, it means the corresponding index (i.e., the element) is in the set. Otherwise, its not.

In a more mathematical representation way:

- Width bit vector represents subsets subsets of

- if

Sets Operations

&is related to Intersection|is related to Union~is related to Complement^is related to Symmetric difference

Shift Operations

Left Shift: x << y

- Shift bit-vector

xleftypositions- Throw away extra bits on left

- Fill with 0’s on right

Right Shift: x >> y

- Shift bit-vector

xrightypositions- Throw away extra bits on right

- Logical shift

- Fill with 0’s on left

- Arithmetic shift

- Replicate most significant bit on left

Undefined Behaviour

- Shift amount 0

- Shift amount word size

- Left shift a signed value

Integers

Representation: unsigned and signed

Unsigned

Two’s Complement

Invert mappings

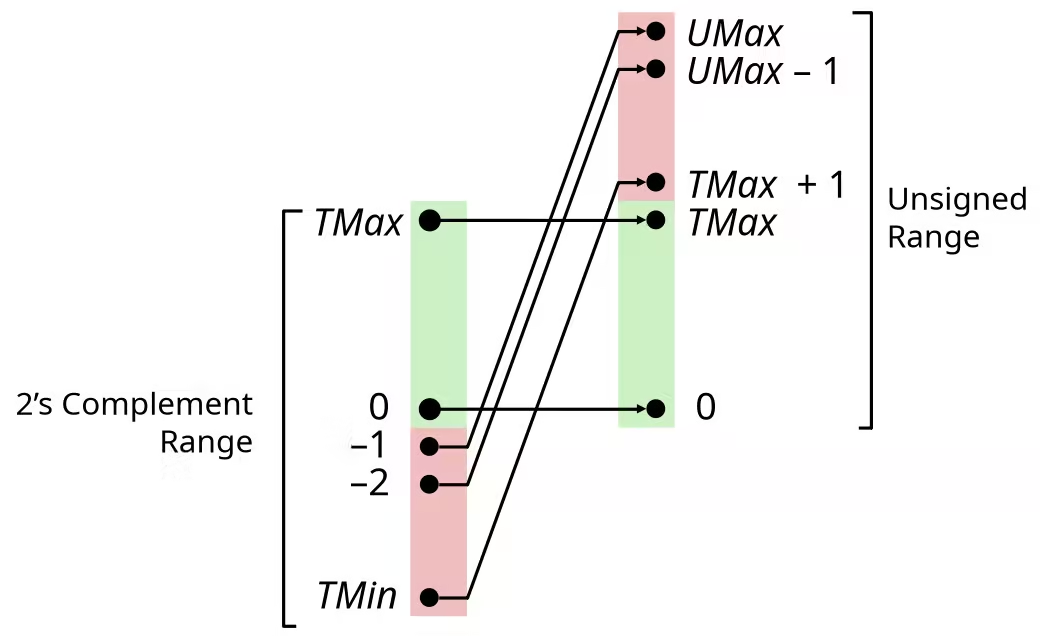

Numeric Ranges

-

Unsigned Values

-

Two’s Complement Values

TIPIn C, these ranges are declared in

limits.h. E.g.,ULONG_MAX,LONG_MAX,LONG_MIN. Values are platform specific.

Observations

-

- Asymmetric range (Every positive value can be represented as a negative value, but cannot be represented as a positive value)

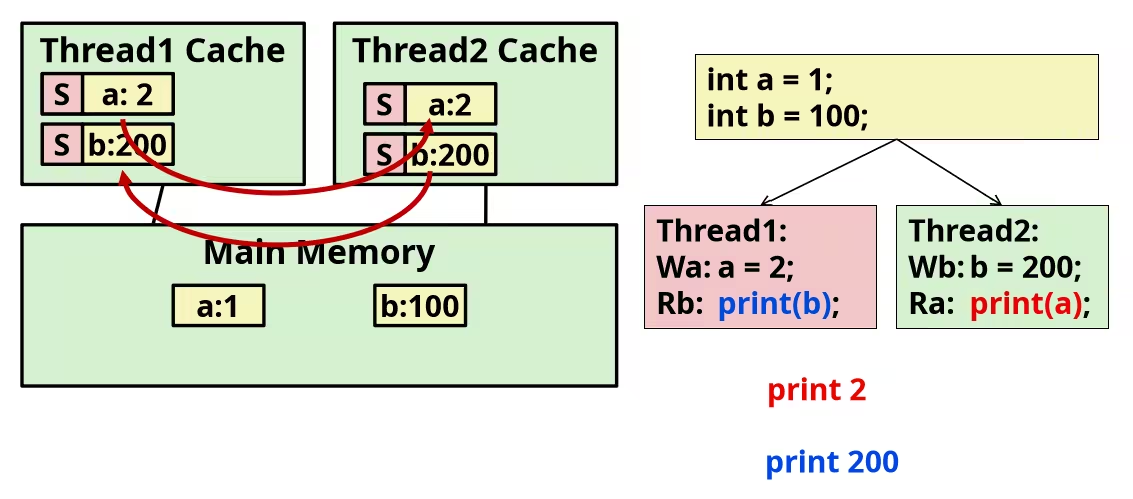

Difference between Unsigned & Signed Numeric Values

The difference between Unsigned & Signed Numeric Values is .

For example, if you want convert a unsigned numeric value to its signed form, just use this value minus , or if you want convert a signed numeric value to its unsigned form, you plus .

Conversion, casting

Mappings between unsigned and two’s complement numbers keep bit representations and reinterpret.

For example, casting a signed value to its unsigned form, the most significant bit from large negative weight becomes to large positive weight and vice versa.

Constants

- By default are considered to be signed integers

- Unsigned if have “U” as suffix

Casting

- Explicit casting between signed & unsigned same as and

- Implicit casting also occurs via assignments and procedure call (assignments will casting to lhs’s type)

Expression Evaluation

- If there is a mix of unsigned and signed in single expression, signed values implicitly cast to unsigned

- Including comparison operations

<,>,==,<=,>

| Constants 1 | Constants 2 | Relation | Evaluation |

|---|---|---|---|

0 | 0U | == | unsigned |

-1 | 0 | < | signed |

-1 | 0U | > | unsigned |

2147483647 | -2147483647-1 | > | signed |

2147483647U | -2147483647-1 | < | unsigned |

-1 | -2 | > | signed |

(unsigned)-1 | -2 | > | unsigned |

2147483647 | 2147483648U | < | unsigned |

2147483647 | (int)2147483648U | > | signed |

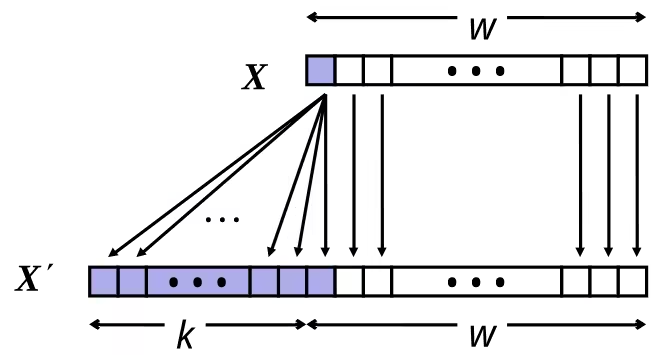

Expanding, truncating

Sign Extension

- Make copies of sign bit

WARNINGConverting from smaller to larger integer data type. C automatically performs sign extension.

Expanding (e.g., short int to int)

- Unsigned: zeros added

- Signed: sign extension

- Both yield expected result

Truncating (e.g., unsigned to unsigned short)

- Unsigned/signed: bits are truncated

- Result reinterpreted

- Unsigned: mod operation

- Signed: similar to mod

- For small numbers yields expected behavior

Addition, negation, multiplication, shifting

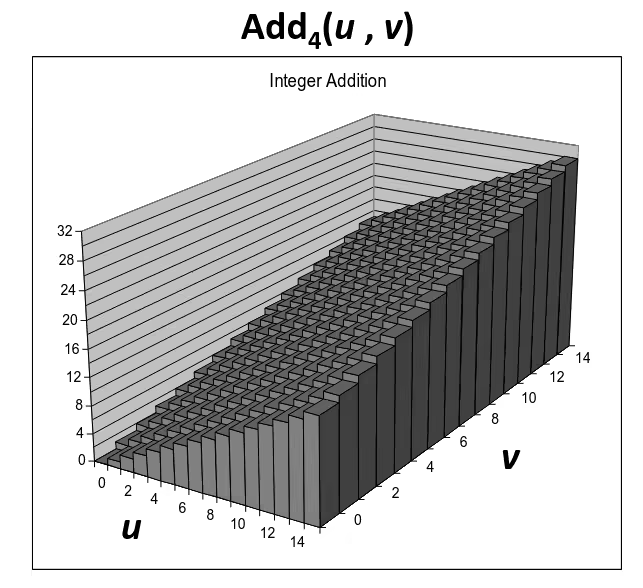

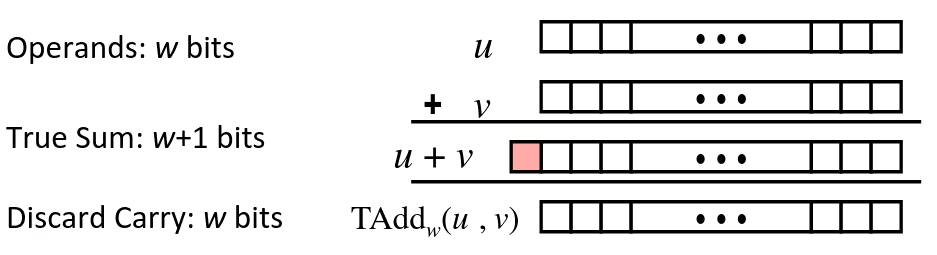

Unsigned Addition

- Standard Addition Function

- Ignore carry output

- Implements Modular Arithmetic

Visualizing (Mathematical) Integer Addition

- Integer Addition

- 4-bit integers

- Compute true sum

- Values increase linearly with and

- Forms planar surface

Visualizing Unsigned Addition

- Wraps Around

- If true sum

- At most once

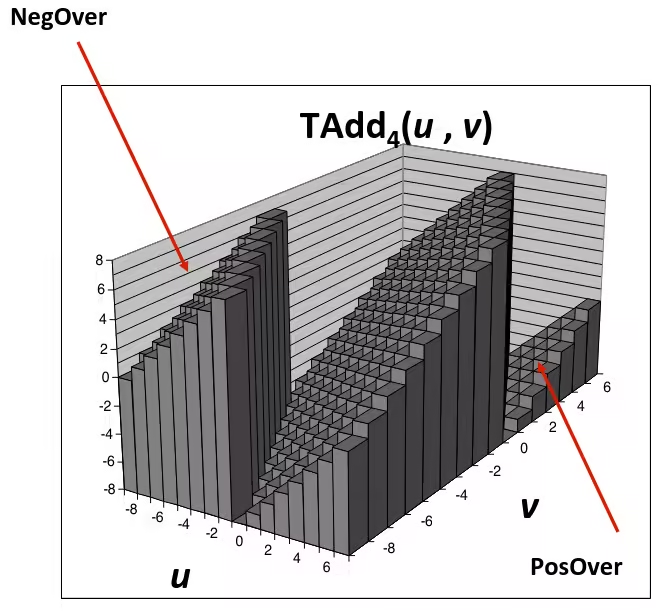

Two’s Complement Addition

- and have Identical Bit-level Behaviour

Signed vs. Unsigned Addition in C:

int s, t, u, v;

s = (int)((unsigned)u + (unsigned)v);t = u + v;

// will give s == tTAdd Overflow

- Functionality

- True sum requires bits

- Drop off MSB

- Treat remaining bits as two’s complement integer

Visualizing Two’s Complement Addition

- Values

- 4-bit two’s comp.

- Range from to

- Wraps Around

- If sum

- Becomes negative

- At most once

- If sum

- Becomes positive

- At most once

- If sum

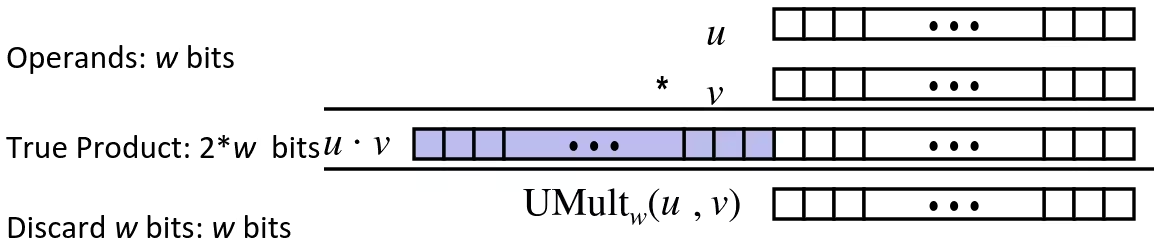

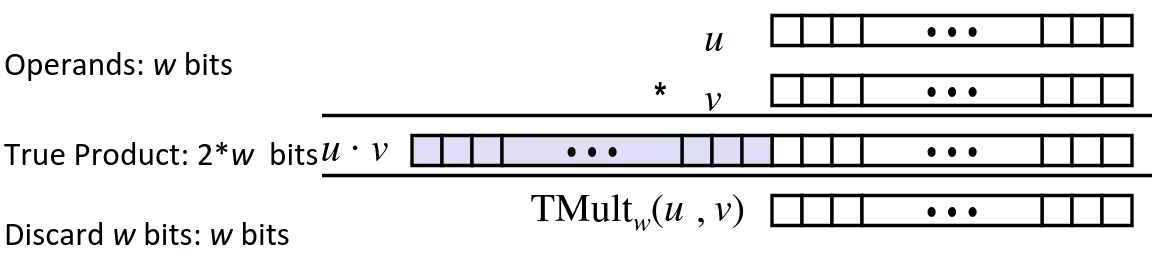

Multiplication

- Goal: Computing Product of -bit numbers

- Either signed or unsigned

- But, exact results can be bigger than bits

- Unsigned: up to bits

- Result range:

- Two’s complement min (negative): Up to bits

- Result range:

- Two’s complement max (positive): Up to bits, but only for

- Result range:

- Unsigned: up to bits

- So, maintaining exact results…

- would need to keep expanding word size with each product computed (exhaust memory faster)

- is done in software, if needed

- e.g., by “arbitrary precision” arithmetic packages

Unsigned Multiplication in C

- Standard Multiplication Function

- Ignore high order bits

- Implements Modular Arithmetic

Signed Multiplication in C

- Standard Multiplication Function

- Ignore high order bits

- Some of which are different for signed vs. unsigned multiplication

- Lower bits are the same

Power-of-2 Multiply with Shift

- Operation

u << kgives (basically increases each bits weight)- Both signed and unsigned

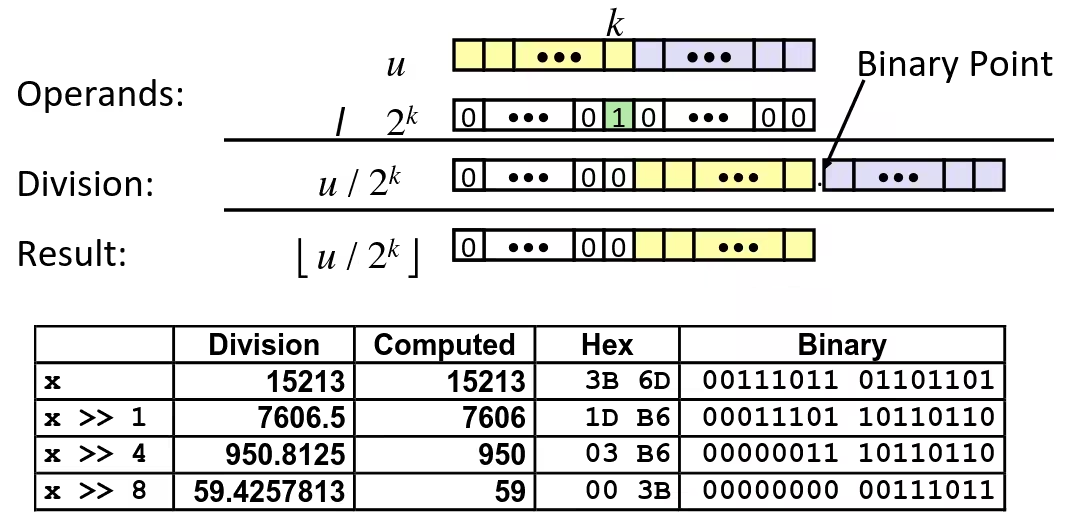

Unsigned Power-of-2 Divide with Shift

- Quotient of Unsigned by Power of 2

u >> kgives- Uses logical shift

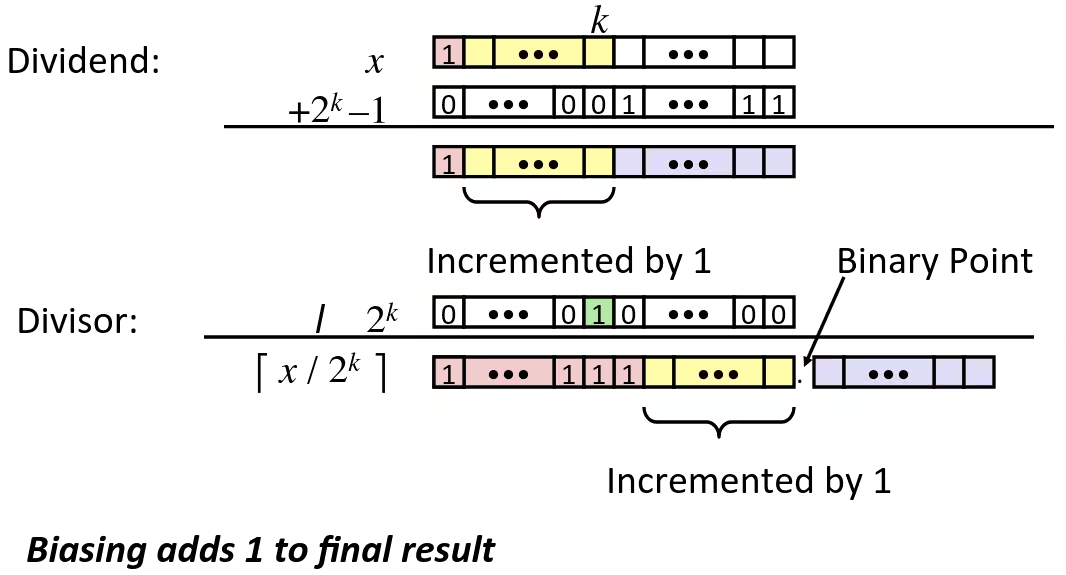

Signed Power-of-2 Divide with Shift

- Quotient of Signed by Power of 2

- Uses arithmetic shift

- Want (Round toward 0)

- Compute as

- In C:

(x + (1 << k) - 1) >> k - Biases dividend toward 0

- In C:

Case 1: No rounding

Case 2: Rounding

Handy tricks

If you want convert a value to its negative form by hand or in mind, either unsigned or signed.

Just do:

When Should I Use Unsigned ?

CAUTIONDon’t use without understanding implications!

- Do use when performing modular arithmetic

- Multiplication arithmetic

- Do use when using bits to represent sets

- Logical right shift, no sign extension

Representation in memory, pointers, strings

Byte-Oriented Memory Organization

- Programs refer to data by address

- Conceptually, envision it as a very large array of bytes

- In reality, it’s not, but can think of it that way

- An address is like an index into that array

- and, a pointer variable stores an address

- Conceptually, envision it as a very large array of bytes

- System provides private address spaces to each “process”

- So, a program can clobber its own data, but not that of others

Machine Words

- Any given computer has a “Word Size”

- Nominal size of integer-valued data

- and of addresses

- Until recently, most machines used 32 bits (4 bytes) as word size

- Limits addresses to 4 GB ( bytes)

- Increasingly, machines have 64‐bit word size

- Potentially, could have 18 EB (exabytes) of addressable memory

- That’s bytes

- Machines still support multiple data formats

- Fractions or multiples of word size

- Always integral number of bytes

- Nominal size of integer-valued data

Word‐Oriented Memory Organization

- Addresses Specify Byte Locations

- Address of first byte in word

- Addresses of successive words differ by 4 (32‐bit) or 8 (64‐bit)

Byte Ordering

- Big Endian: Sun, PPC Mac, Internet

- Least significant byte has highest address

- Little Endian: x86, ARM processors running Android, iOS, and Windows

- Least significant byte has lowest address

Representation Strings

In C, either little endian or big endian machine, strings in memory represented in the same way, because a string is essentially an array of characters ends with \x00, each character is one byte encoded in ASCII format and single byte (character) do not obey the byte ordering rule.

Floating Point

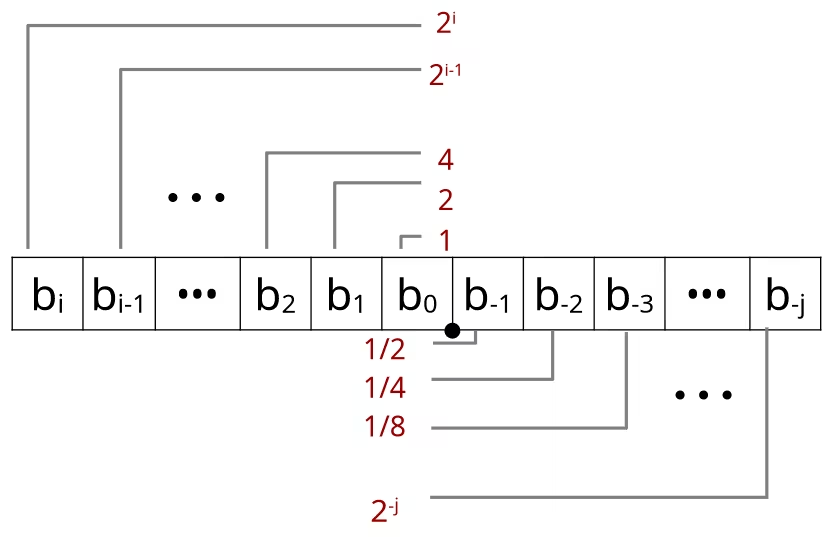

Background: Fractional binary numbers

Representing

- Bits to right of “binary point” represent fractional powers of 2

- Represents rational number:

| Value | Representation |

|---|---|

Observations

- Divide by 2 by shifting right (unsigned)

- Multiply by 2 by shifting left

- Numbers of form are just below

- Use notation ( depends on how many bits you have to the right of the binary point. If it gets smaller the more, the more of those bits you have there, and it gets closer to )

Limitation 1

- Can only exactly represent numbers of the form

- Other rational numbers have repeating bit representations, but cause computer system can only hold a finite number of bits, so

| Value | Representation |

|---|---|

Limitation 2

- Just one setting of binary point within the bits

- Limited range of numbers (very small values ? very large ? we have to move the binary point to represent sort of wide as wide a range as possible with as much precision given the number of bits)

Definition: IEEE Floating Point Standard

IEEE Standard 754

Established in 1985 as uniform standard for floating point arithmetic. Before that, many idiosyncratic formats.

Although it provided nice standards for rounding, overflow, underflow… It is hard to make fast in hardware (Numerical analysts predominated over hardware designers in defining standard)

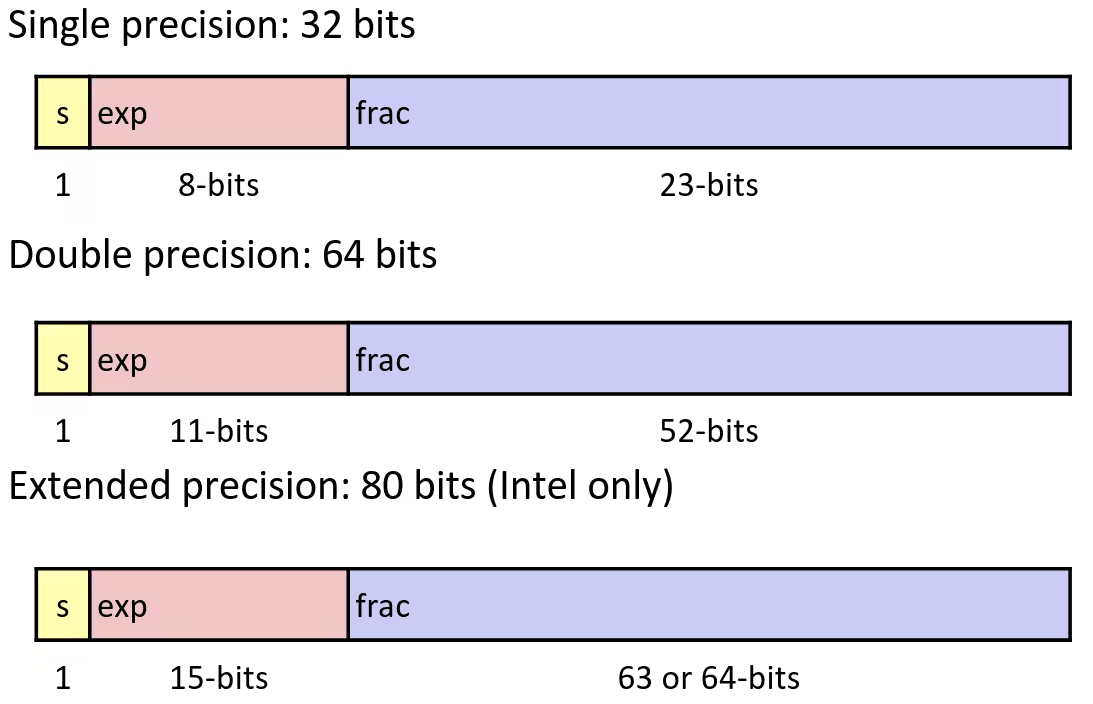

Floating Point Representation

Numerical form:

- Sign bit determines whether number is negative or positive

- Significand (Mantissa) normally a fractional value in range

- Exponent weights value by power of two

Encoding

- MSB

sis sign bit expfield encodes (but is not equal to )fracfield encodes (but is not equal to )

Normalized Values

- Condition: and

- Exponent coded as a biased value:

- is unsigned value of exp field

- , where is number of exponent bits

- Single precision: (, )

- Double precision: (, )

- Significand coded with implied leading :

- is bits of frac field

- Minimum when

- Maximum when

- Get extra leading bit for “free”

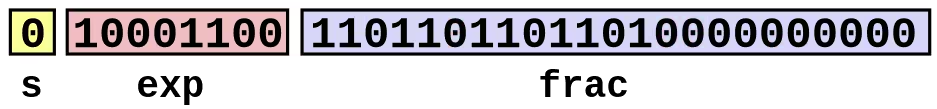

Normalized Encoding Example

- Value:

float F = 15213.0; - Significand

- Exponent

So the result would be:

# manually calculate((-1)**0)*(1+1/2+1/4+1/16+1/32+1/128+1/256+1/1024+1/2048+1/8192)*(2**13) == 15213.0

# by struct packageimport struct

bits = 0b01000110011011011011010000000000f = struct.unpack("f", struct.pack("I", bits))[0]

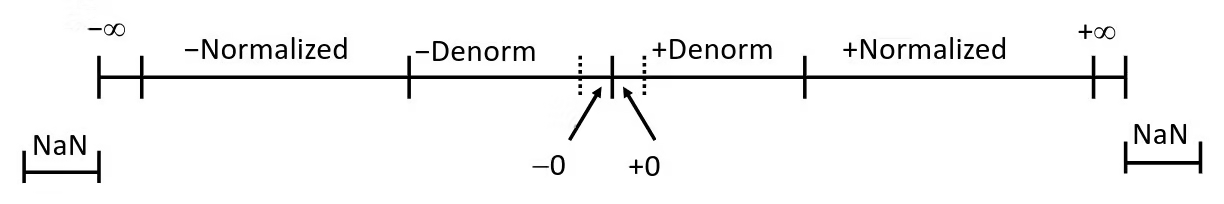

print(f)Denormalized Values

- Condition:

- Exponent value:

- Significand coded with implied leading :

- is bits of frac field

- Cases

-

- Represents zero value

- Note distinct values: and

-

- Numbers closet to

- Equispaced

-

Special Values

-

Condition:

-

Case:

- Represents value

- Operation that overflows

- Both positive and negative

- E.g.,

-

Case:

- Not-a-Number (NaN)

- Represents case when no numeric value can be determined

- E.g.,

Visualization: Floating Point Encodings

Example and properties

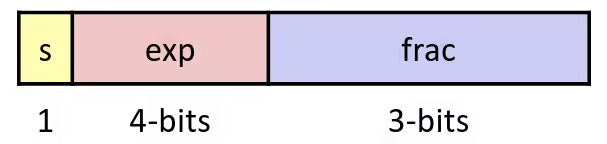

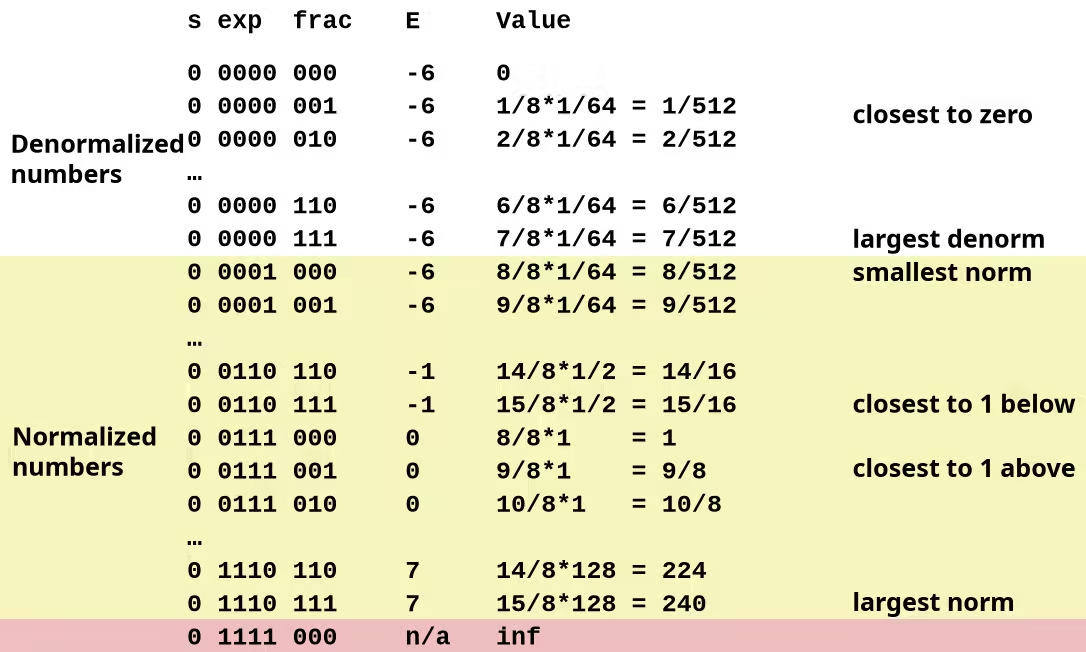

Tiny Floating Point Example

Think about this 8-bit Floating Point Representation below, it obeying the same general form as IEEE Format:

Dynamic Range (Positive Only)

Distribution of Values

Still think about our tiny example, notice how the distribution gets denser toward zero.

Here is a scaled close-up view:

Special Properties of the IEEE Encoding

- Floating Point Zero Same as Integer Zero

- All bits = 0

- Can (Almost) Use Unsigned Integer Comparison

- Must first compare sign bits

- Must consider

- NaNs problematic

- What should comparison yield ?

- Otherwise OK

- Denormalized vs. Normalized

- Normalized vs. Infinity

Rounding, addition, multiplication

Floating Point Operations: Basic Idea

Basic idea

- First compute exact result

- Make it fit into desired precision

- Possibly overflow if exponent too large

- Possibly round to fit into frac

Rounding

Rounding Modes (illustrate with $ rounding)

| $1.40 | $1.60 | $1.50 | $2.50 | -$1.50 | |

|---|---|---|---|---|---|

| Towards Zero | $1 | $1 | $1 | $2 | -$1 |

| Round Down () | $1 | $1 | $1 | $2 | -$2 |

| Round Up () | $2 | $2 | $2 | $3 | -$1 |

| Nearest Even (default) | $1 | $2 | $2 | $2 | -$2 |

Round to EvenIt means, if you have a value that’s less than half way then you round down, if more than half way, round up. When you have something that’s exactly half way, then what you do is round towards the nearest even number.

Closer Look at Round-To-Even

Default Rounding Mode

- Hard to get any other kind without dropping into assembly

- All others are statistically biased

- Sum of set of positive numbers will consistently be over or underestimated

Applying to Other Decimal Places / Bit Positions

- When exactly half way between two possible values

- Round so that least significant digit is even

E.g., round to nearest hundredth:

| Value | Rounded | |

|---|---|---|

| 7.8949999 | 7.89 | Less than half way |

| 7.8950001 | 7.90 | Greater than half way |

| 7.8950000 | 7.90 | Half way (round up) |

| 7.8850000 | 7.88 | Half way (rond down) |

Rounding Binary Numbers

Binary Fractional Numbers

- “Even” when least significant bit is 0

- “Half way” when bits to right of rounding position is

E.g., round to nearest (2 bits right of binary point)

| Value | Binary | Rounded | Action | Rounded Value |

|---|---|---|---|---|

| Less than half way (round down) | ||||

| Greater than half way (round up) | ||||

| Half way (round up) | ||||

| Half way (round down) |

Floating Point Multiplication

- Sign is ^

- Significand is

- Exponent is

Fixing

- If , shift right, increment

- If out of range, overflow

- Round to fit frac precision

Biggest chore in implementation is multiplying significands

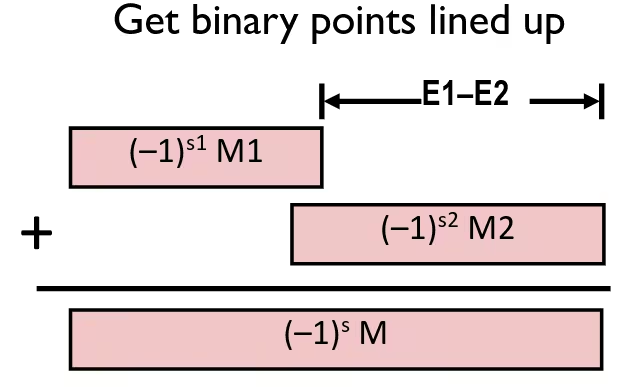

Floating Point Addition

(Assume )

- Sign , significand

- Result of signed align & add

- Exponent

Fixing

- If , shift right, increment

- If , shift left positions, decrement by

- Overflow if out of range

- Round to fit frac precision

Mathematical Properties of Floating Point Add

- Compare to those of Abelian Group

- Closed under addition ? (Yes)

- But may generate infinity or NaN

- Commutative ? (Yes)

- Associative ? (No)

- Overflow and inexactness of rounding

(3.14+1e10)-1e10 = 0, 3.14+(1e10-1e10) = 3.14

- is additive identity ? (Yes)

- Every element has additive inverse ? (Almost)

- Except for infinities & NaNs

- Closed under addition ? (Yes)

- Monotonicity

- ? (Almost)

- Except for infinities & NaNs

- ? (Almost)

Mathematical Properties of Floating Point Mult

- Compare to Commutative Ring

- Closed under multiplication ? (Yes)

- But may generate infinity or NaN

- Multiplication Commutative ? (Yes)

- Multiplication is Associative ? (No)

- Possibility of overflow, inexactness of rounding

(1e20*1e20)*1e-20 = inf, 1e20*(1e20*1e-20) = 1e20

- is multiplicative identity ? (Yes)

- Multiplication distributes over addition ? (No)

- Possibility of overflow, inexactness of rounding

1e20*(1e20-1e20) = 0.0, 1e20*1e20-1e20*1e20 = NaN

- Closed under multiplication ? (Yes)

- Monotonicity

- ? (Almost)

- Except for infinities & NaNs

- ? (Almost)

Floating Point in C

Conversions / Casting

CAUTIONCasting between

int,float, anddoublechanges bit representation!

double/floattoint- Truncates fractional part

- Like rounding toward zero

- Not defined when out of range or : Generally sets to

inttodouble- Exact conversion, as long as

inthas bit word size

- Exact conversion, as long as

inttofloat- Will round according to rounding mode

Machine-Level Programming

History of Intel processors and architectures

Nobody (at least me), can keep these long history in mind! So I’ll just skipping this part to save life~

C, Assembly, Machine Code

Definitions

- Architecture: (also ISA: instruction set architecture) The parts of a processor design that one needs to understand or write assembly/machine code

- Examples: instruction set specification, registers

- Microarchitecture: Implementation of the architecture

- Examples: cache sizes and core frequency

Assembly / Machine Code View

Turning C into Object Code

- Code in files

p1.cp2.c - Compile with command:

gcc -Og p1.c p2.c -o p- Use basic optimizations (

-Og) [New to recent versions of GCC] - Put resulting binary in file

p

- Use basic optimizations (

Assembly Characteristics: Data Types

- “Integer” data of 1, 2, 4 or 8 bytes

- Data values

- Address (untyped pointers)

- Floating Point data of 4, 8 or 10 bytes

- Code: Byte sequences encoding series of instructions

- No aggregate types such as arrays or structures

- Just contiguously allocated bytes in memory

Assembly Characteristics: Operations

- Perform arithmetic function on register or memory data

- Transfer data between memory and register

- Load data from memory into register

- Store register data into memory

- Transfer control

- Conditional branches

- Unconditional jumps to/from procedures

Object Code

- Assembler

- Translates

.sinto.o - Binary encoding of each instruction

- Nearly-complete image of executable code

- Missing linkages between code in different files

- Translates

- Linker

- Resolves references between files

- Combines with static run-time libraries

- E.g., code for

malloc,printf

- E.g., code for

- Some libraries are dynamically linked

- Linking occurs when program begins execution

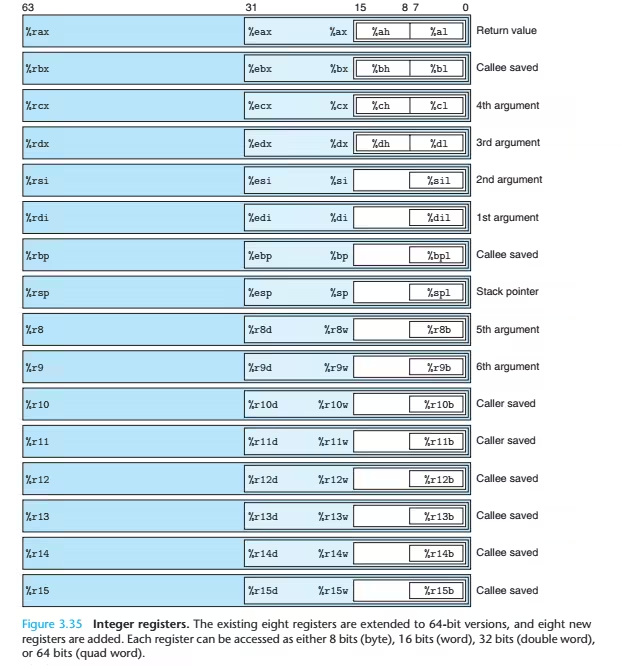

Assembly Basics: Registers, Operands, Move

x86‐64 Integer Registers

Moving Data

movq src, dst- Operand Types

- Immediate: Constant integer data

- E.g.,

$0x400,$-533 - Like C constant, but prefixed with

$ - Encoded with 1, 2, or 4 bytes

- E.g.,

- Register: One of 16 integer registers

- E.g.,

%rax,%r13 - But

%rspreserved for special use - Others have special uses for particular instructions

- E.g.,

- Memory: 8 consecutive bytes of memory at address given by register

- Simplest example:

(%rax) - Various other “address modes”

- Simplest example:

- Immediate: Constant integer data

Complete Memory Addressing Modes

D(Rb, Ri, S),Mem[Reg[Rb] + S * Reg[Ri] + D]D: Constant “displacement” 1, 2, or 4 bytesRb: Base register: Any of 16 integer registersRi: Index register: Any, except for%rspS: Scale (1, 2, 4, or 8)

- Special Cases

(Rb, Ri):Mem[Reg[Rb] + Reg[Ri]]D(Rb, Ri):Mem[Reg[Rb] + Reg[Ri] + D](Rb, Ri, S):Mem[Reg[Rb] + S * Reg[Ri]]

Arithmetic & Logical Operations

Address Computation Instruction

leaq src, dstsrcis address mode expression- Set

dstto address denoted by expression

- Uses

- Computing addresses without a memory reference

- E.g., translation of

p = &x[i];

- E.g., translation of

- Computing arithmetic expressions of the form

x + k * ykequals to 1, 2, 4, or 8

- Computing addresses without a memory reference

Here is an example of computing arithmetic expression with leaq:

long m12(long x) { return x * 12;}Converted to ASM by compiler:

leaq (%rdi, %rdi, 2), %rax # t <- x + x * 2salq $2, %rax # return t << 2A bit more complex one:

long arith(long x, long y, long z) { long t1 = x + y; long t2 = z + t1; long t3 = x + 4; long t4 = y * 48; long t5 = t3 + t4; long rval = t2 * t5;

return rval;}arith: leaq (%rdi, %rsi), %rax # t1 addq %rdx, %rax # t2 leaq (%rsi, %rsi, 2), %rdx salq $4, %rdx # t4 leaq 4(%rdi, %rdx), %rcx # t5 imulq $rcx, %rax # rval retControl: Condition Codes

Condition Codes (Implicit Setting)

Think of it as a side effect by arithmetic operations.

leaq won’t effect flags.

Condition Codes (Explicit Setting)

- Compare Instrucion

cmpq src2, src1: computingsrc1 - src2without setting destination

- Test Instruction

testq src2, src1: computingsrc1 & src2without setting destination- Useful to have one of the operands be a mask

Reading Condition Codes

setXInstructions- Set low‐order byte of destination (must one of addressable byte registers) to 0 or 1 based on combinations of condition codes

- Does not alter remaining 7 bytes

- Typically use

movzbl(Move with Zero-Extend from Byte to Long) to finish job- 32‐bit instructions also set upper 32 bits to 0

- Typically use

NOTEAny computation where the result is a 32-bit result, it will zero out the higher 32-bits of the register. And its different for example the byte level operations only affect the bytes, the word operations only affect two bytes.

| setX | Condition | Description |

|---|---|---|

sete | ZF | Equal / Zero |

setne | ~ZF | Not Equal / Not Zero |

sets | SF | Negative |

setns | ~SF | Nonnegative |

setg | ~(SF ^ OF) & ~ZF | Greater (Signed) |

setge | ~(SF ^ OF) | Greater or Equal (Signed) |

setl | (SF ^ OF) | Less (Signed) |

setle | (SF ^ OF) | ZF | Less or Equal (Signed) |

seta | ~CF & ~ZF | Above (Unsigned) |

setb | CF | Below (Unsigned) |

NOTE

sil,dil,spl,bplare all 1 byte registers.

int gt(long x, long y) { return x > y;}cmpq %rsi, %rdi # Compare x:ysetg %al # Set %al to 1 when >movzbl %al, %eax # Zero rest of %raxretConditional Branches

Jumping

jXInstructions- Jump to different part of code depending on condition codes

| jX | Condition | Description |

|---|---|---|

jmp | 1 | Unconditional |

je | ZF | Equal / Zero |

jne | ~ZF | Not Equal / Not Zero |

js | SF | Negative |

jns | ~SF | Nonnegative |

jg | ~(SF ^ OF) & ~ZF | Greater (Signed) |

jge | ~(SF ^ OF) | Greater or Equal (Signed) |

jl | SF ^ OF | Less (Signed) |

jle | (SF ^ OF) | ZF | Less or Equal (Signed) |

ja | ~CF & ~ZF | Above (Unsigned) |

jb | CF | Below (Unsigned) |

Using Conditional Moves

- Conditional Move Instructions

- Instruction supports:

if (Test) Dest <- Src - Supported in post-1995 x86 processors

- GCC tries to use them (but, only when known to be safe)

- Branches are very disruptive to instruction flow through pipelines

- Conditional moves do not require control transfer

- Instruction supports:

Here is a simple example of conditional move:

long absdiff(long x, long y) { long result; if (x > y) result = x - y; else result = y - x; return result;}absdiff: movq %rdi, %rax # x subq %rsi, %rax # result = x - y movq %rsi, %rdx subq %rdi, %rdx # eval = y - x cmpq %rsi, %rdi # x:y cmovle %rdx, %rax # if <=, result = eval retBad Cases for Conditional Move

- Expensive Computations:

val = Test(x) ? Hard1(x) : Hard2(x);- Both values get computed

- Only makes sense when computations are very simple

- Risky Computations:

val = p ? *p : 0;- Both values get computed

- May have undesirable effects

- Computations with side effects:

val = x > 0 ? x *= 7 : x += 3;- Both values get computed

- Must be side-effect free

Loops

IMPORTANTEach kind of loop will convert to their

gotoversion and then assembly code.

”Do-While” Loop

C Code:

long pcount_do(unsigned long x) { long result = 0; do { result += x & 0x1; x >>= 1; } while (x); return result;}Goto Version:

long pcount_goto(unsigned long x) { long result = 0;loop: result += x & 0x1; x >>= 1; if (x) goto loop; return result;}Assembly Code:

movl $0, %eax # result = 0.L2: # loop: movq %rdi, %rdx andl $1, %edx # t = x & 0x1 addq %rdx, %rax # result += t shrq %rdi # x >>= 1 jne .L2 # if (x) goto loop rep; retGeneral “Do-While” Translation

do { Body} while (Test);loop: Body if (Test) goto loop”While” Loop

General “While” Translation 1 (“Jump‐to-middle” translation, compiled with -Og)

while (Test) { Body} goto testloop: Bodytest: if (Test) goto loopdone:General “While” Translation 2 (Compiled with -O1)

while (Test) { Body}To Do-While Version:

if (!Test) goto done; do { Body } while (Test);done:Goto Version:

if (!Test) goto done;loop: Body if (Test) goto loop;done:The initial conditional guards entrance to loop.

”For” Loop

”For” Loop “While” Conversion

For Version:

for (Init; Test; Update) BodyWhile Version:

Init;while (Test) { Body Update;}“For” Loop “Do-While” Conversion

Initial test can be optimized away.

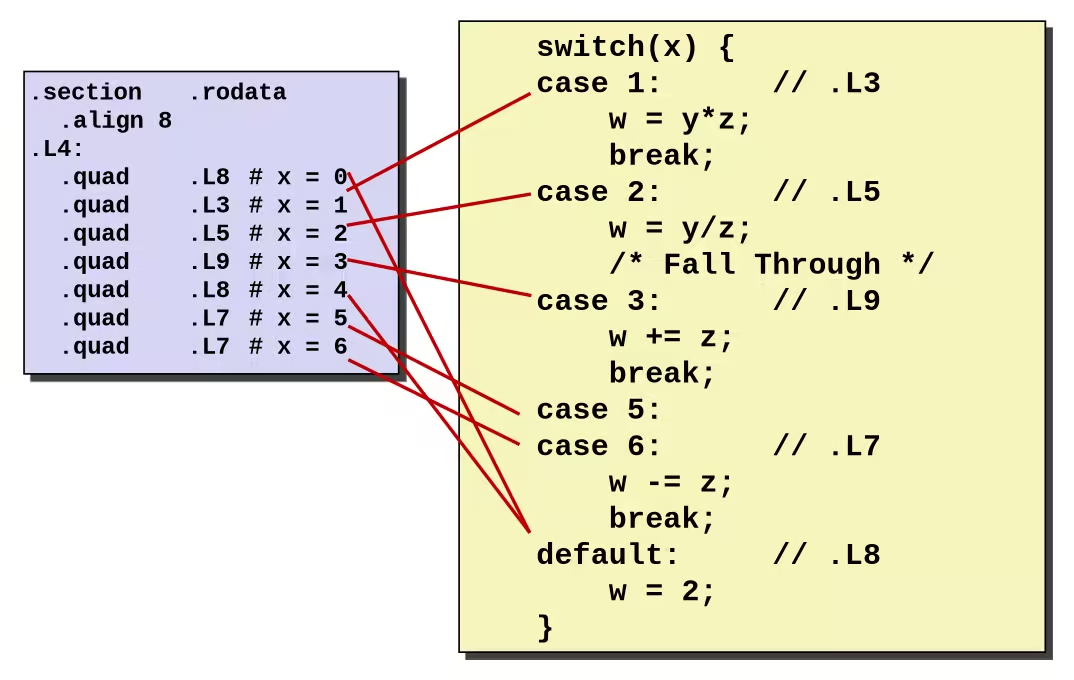

Switch Statements

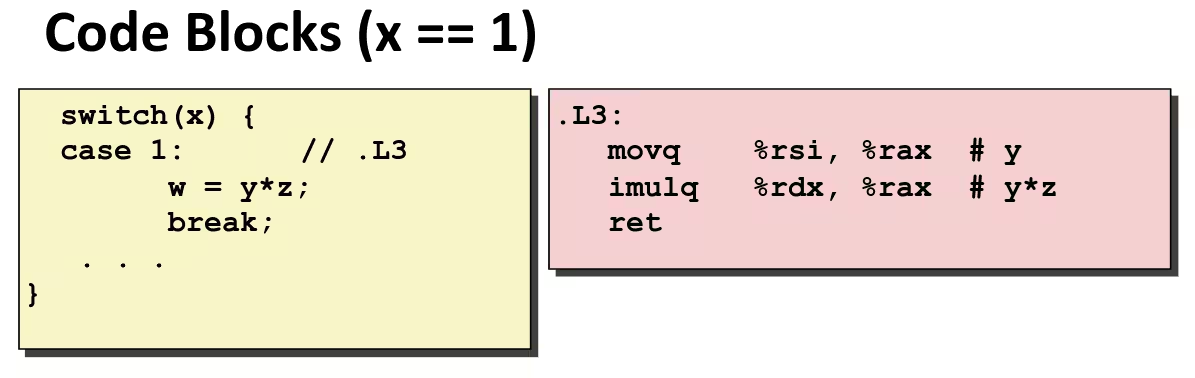

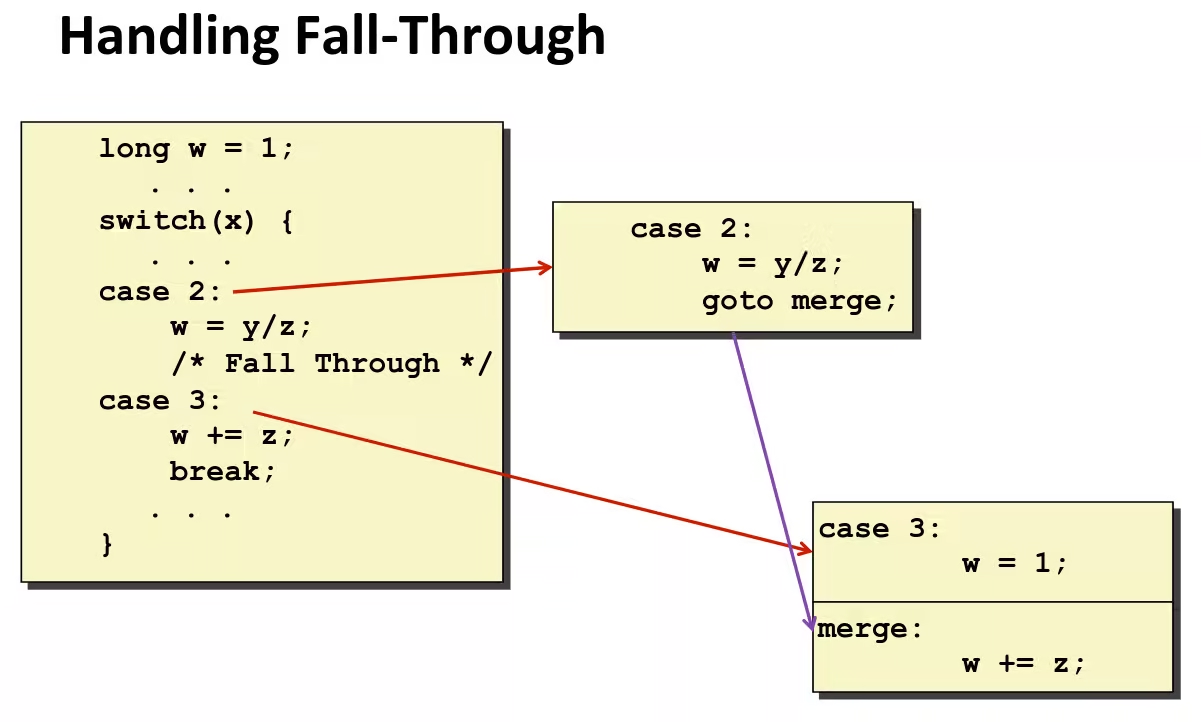

Switch Statement Example

long switch_eg(long x, long y, long z) { long w = 1; switch (x) { case 1: w = y * z; break; case 2: w = y / z; /* Fall Through */ case 3: w += z; break; case 5: case 6: w -= z; break; default: w = 2; } return w;}- Multiple case labels: 5 & 6

- Fall through cases: 2

- Missing cases: 4

Switch Statement Example in Assembly

switch_eg: movq %rdx, %rcx cmpq $6, %rdi # x:6 ja .L8 # use default jmp *.L4(, %rdi, 8) # goto *JTab[x]Note that w not initialized here.

TIP

ja .L8considering the result is unsigned, so its a smart way to tackle negative number.

Jump Table Structure

Jump Table

.section .rodata .align 8.L4: .quad .L8 # x = 0 .quad .L3 # x = 1 .quad .L5 # x = 2 .quad .L9 # x = 3 .quad .L8 # x = 4 .quad .L7 # x = 5 .quad .L7 # x = 6- Table Structure

- Each target requires 8 bytes

- Base address at

.L4

- Jumping

- Direct:

jmp .L8- Jump target is denoted by label

.L8

- Jump target is denoted by label

- Indirect:

jmp *.L4(, %rdi, 8)- Start of jump table:

.L4 - Must scale by factor of 8 (addresses are 8 bytes)

- Fetch target from effective address

.L4 + %rdi * 8- Only for

0 <= x <= 6

- Only for

- Start of jump table:

- Direct:

Procedures

Stack Structure

x86-64 Stack

- Region of memory managed with stack discipline

- Grows toward lower addresses

- Register

%rspcontains lowest stack address- address of “top” element

Push

pushq src- Fetch operand at

src - Decrement

%rspby 8 - Write operand at address given by

%rsp

- Fetch operand at

Pop

popq dest- Read value at address given by

%rsp - Increment

%rspby 8 - Store value at

dest(must be register)

- Read value at address given by

Calling Conventions

Procedure Control Flow

- Use stack to support procedure call and return

- Procedure call:

call label- Push return address (address of the next instruction right after call) on stack

- Jump to label

- Procedure return:

ret- Pop address from stack

- Jump to address

Managing local data

- First 6 arguments are passing by registers:

%rdi,%rsi,%rdx,%rcx,%r8,%r9, other more arguments will store in stack - Only allocate stack space when needed

- Return value store in

%rax

Stack Frames

- Contents

- Return information

- Local storage (if needed)

- Temporary space (if needed)

- Management

- Space allocated when enter procedure

- “Set-up” code

- Includes push by

callinstruction

- Deallocated when return

- “Finish” code

- Includes pop by

retinstruction

- Space allocated when enter procedure

x86-64 / Linux Stack Frame

-

Current Stack Frame (“Top” to Bottom)

- Argument build: Parameters for function about to call

-

Local variables

- If can’t keep in registers

-

Saved register context

-

Old frame pointer (optional)

-

Caller Stack Frame

- Return address

- Arguments for this call

NOTEAs we often see the program often allocates more space on the stack than it really needs to, its because some conventions about trying to keep addresses on aligned.

Register Saving Conventions

- Caller Saved

- Caller saves temporary values in its frame before the call

- Callee Saved

- Callee saves temporary values in its frame before using

- Callee restores them before returning to caller

x86-64 Linux Register Usage 1

%rax- Return value

- Also caller-saved

- Can be modified by procedure

%rdi,%rsi,%rdx,%rcx,%r8,%r9- Arguments

- Also caller-saved

- Can be modified by procedure

%r10,%r11- Caller-saved

- Can be modified by procedure

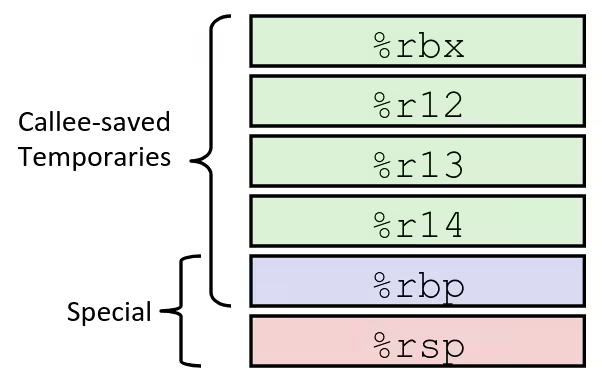

x86-64 Linux Register Usage 2

%rbx,%r12,%r13,%r14- Callee-saved

- Callee must save & restore

%rbp- Callee-saved

- Callee must save & restore

- May be used as frame pointer

- Can mix & match

%rsp- Special form of callee save

- Restored to original value upon exit from procedure

Recursion Example

long pcount_r(unsigned long x) { if (x == 0) return 0; else: return (x & 1) + pcount_r(x >> 1);}pcount_r: movl $0, %eax testq %rdi, %rdi je .L6 pushq %rbx movq %rdi, %rbx andl $1, %ebx shrq %rdi call pcount_r addq %rbx, %rax popq %rbx.L6: rep; ret- Handled Without Special Consideration (just using normal calling conventions)

- Stack frames mean that each function call has private storage

- Saved registers & local variables

- Saved return pointer

- Register saving conventions prevent one function call from corrupting another’s data

- Unless the C code explicitly does so

- Stack discipline follows call / return pattern

- If P calls Q, then Q returns before P

- Last-In, First-Out

- Stack frames mean that each function call has private storage

- Also works for mutual recursion

- P calls Q; Q calls P

Arrays

One-dimensional Array

Array Allocation

T A[L];- Array of data type

Tand lengthL - Contiguously allocated region of

L * sizeof(T)bytes in memory

- Array of data type

Array Access

T A[L];- Array of data type

Tand lengthL - Identifier

Acan be used as a pointer to array element 0: TypeT *

- Array of data type

Array Accessing Example

#define ZLEN 5

typedef int zip_dig[ZLEN];

int get_digit(zip_dig z, int digit) { return z[digit];}movl (%rdi, %rsi, 4), %eax # z[digit]- Register

%rdicontains starting address of array - Register

%rsicontains array index - Desired digit at

%rdi + 4 * %rsi

Array Loop Example

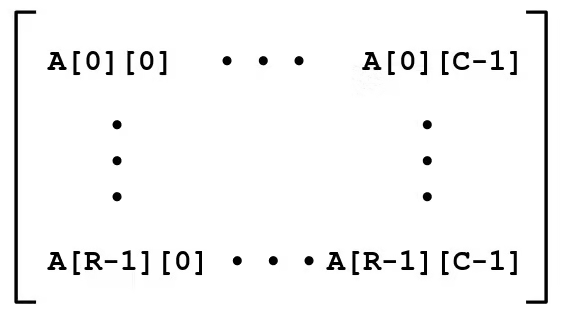

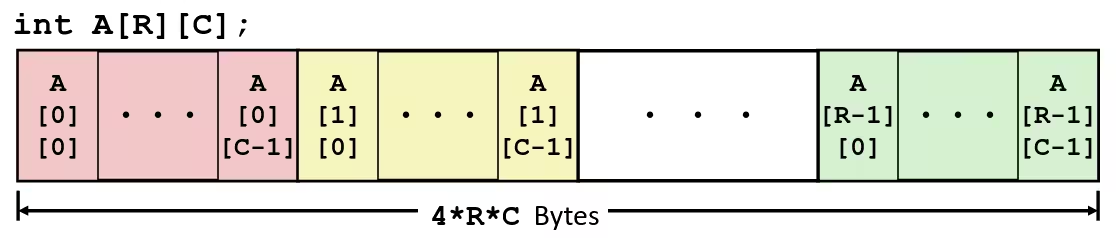

void zincr(zip_dig z) { size_t i; for (i = 0; i < ZLEN; i++) { z[i]++; }} movl $0, %eax # i = 0 jmp .L3 # goto middle.L4: # loop: addl $1, (%rdi, %rax, 4) # z[i]++ addq $1, %rax # i++.L3: # middle cmpq $4, %rax # i:4 jbe .L4 # if <=, goto loop rep; retMultidimensional (Nested) Arrays

T A[R][C]- 2D array of data type

T Rrows,Ccolumns- Type

Telement requiresKbytes

- 2D array of data type

- Array Size

R * C * Kbytes

- Arrangement

- Row-Major Ordering

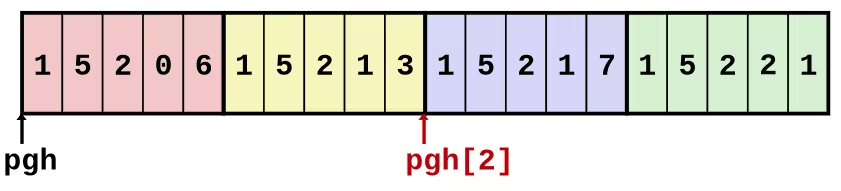

Nested Array Example

#define ZLEN 5#define PCOUNT 4

typedef int zip_dig[ZLEN];

zip_dig pgh[PCOUNT] = { {1, 5, 2, 0, 6}, {1, 5, 2, 1, 3}, {1, 5, 2, 1, 7}, {1, 5, 2, 2, 1}}zip_dig pgh[4] is equivalent to int pgh[4][5].

Nested Array Row Access

- Row Vectors

A[i]is array ofCelements- Each element of type

TrequiresKbytes - Starting address

A + i*(C*K)

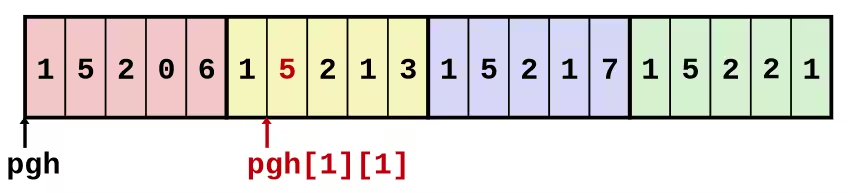

Nested Array Row Access Example

int *get_pgh_zip(int index) { return pgh[index];}leaq (%rdi, %rdi, 4), %rax # 5*indexleaq pgh(, %rax, 4), %rax # pgh + (20*index)- Row Vector

pgh[index]is array of 5int’s- Starting address

pgh + 20*index

- Machine Code

- Computes and returns address

- Compute as

pgh + 4*(index + 4*index)

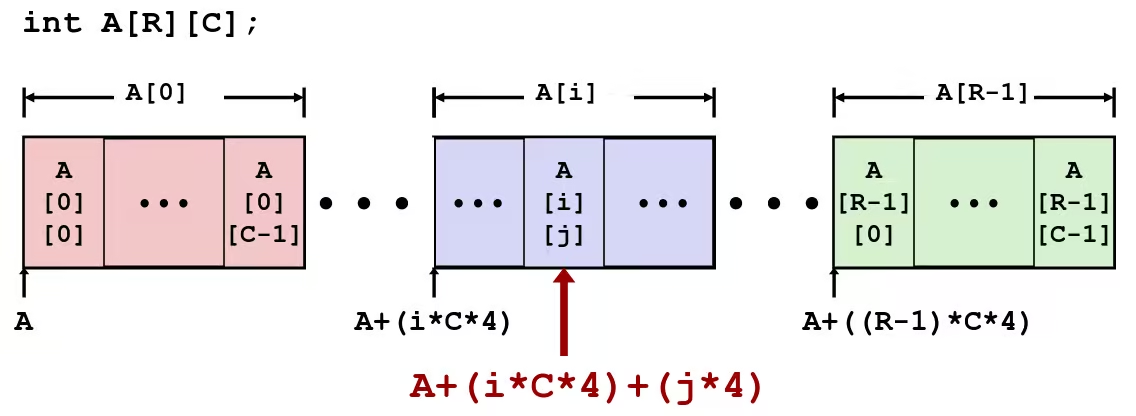

Nested Array Element Access Example

- Array Elements

A[i][j]is element of typeT, which requiresKbytes- Address

A + i*(C*K) + j*K = A + (i*C + j)*K

Nested Array Element Access Example

int get_pgh_digit(int index, int dig) { return pgh[index][dig];}leaq (%rdi, %rdi, 4), %rax # 5*indexaddl %rax, %rsi # 5*index + digmovl pgh(, %rsi, 4), %eax # M[pgh + 4*(5*index + dig)]- Array Elements

pgh[index][dig]isint- Address:

Mem[pgh + 20*index + 4*dig] = Mem[pgh + 4*(5*index + dig)]

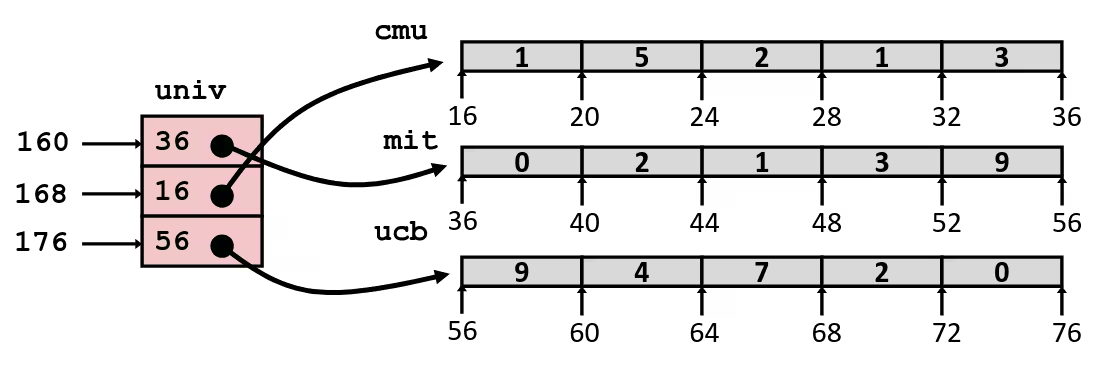

Multi-Level Array

zip_dig cmu = {1, 5, 2, 1, 3};zip_dig mit = {0, 2, 1, 3, 9};zip_dig ucb = {9, 4, 7, 2, 0};

#define UCOUNT 3

int *univ[UCOUNT] = {mit, cmu, ucb};- Variable

univdenotes array of 3 elements - Each element is a pointer (8 bytes)

- Each pointer points to array of

int’s

Element Access in Multi-Level Array

int get_univ_digit(size_t index, size_t digit) { return univ[index][digit];}salq $2, %rsi # 4*digitaddq univ(, %rdi, 8), %rsi # p = univ[index] + 4*digitmovl (%rsi), %eax # return *pret- Element access

Mem[Mem[univ + 8*index] + 4*digit] - Must do two memory reads

- First get pointer to row array

- Then access element within array

N x N Matrix

- Fixed dimensions

- Know value of

Nat compile time

- Know value of

#define N 16

typedef int fix_matrix[N][N];

/* Get element A[i][j] */int fix_ele(fix_matrix A, size_t i, size_t j) { return A[i][j];}- Variable dimensions, explicit indexing

- Traditional way to implement dynamic arrays

#define IDX(n, i, j) ((i)*(n)+(j))

/* Get element A[i][j] */int vec_ele(size_t n, int *A, size_t i, size_t j) { return A[IDX(n,i,j)];}- Variable dimensions, explicit indexing

- Now supported by gcc

/* Get element A[i][j] */int var_ele(size_t n, int A[n][n], size_t i, size_t j) { return A[i][j];}N x N Matrix Access

- Array Elements

size_t n;int A[n][n];- Address

A + i*(C*K) + j*K C = n, K = 4- Must perform integer multiplication

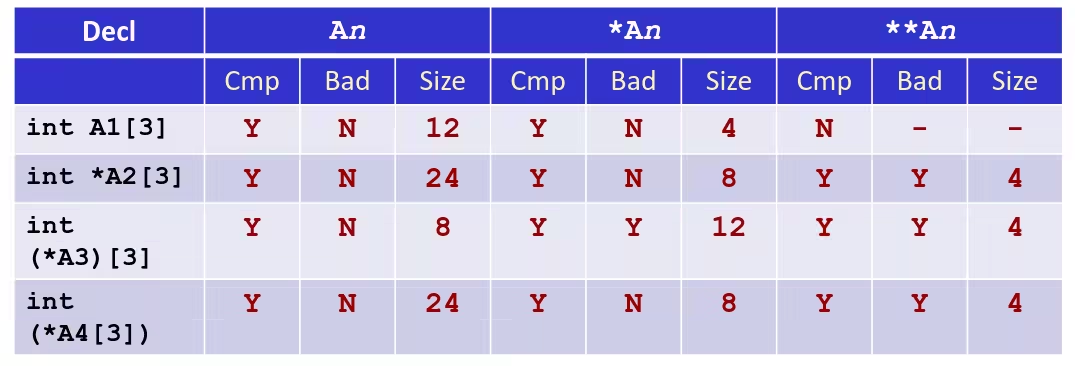

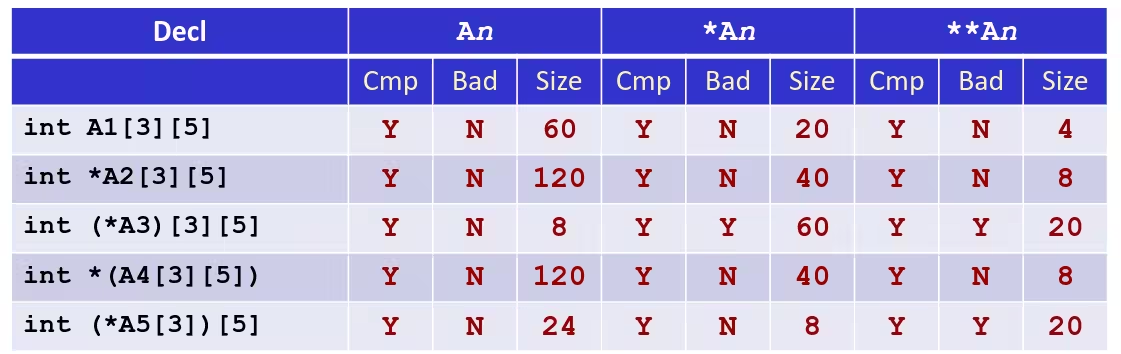

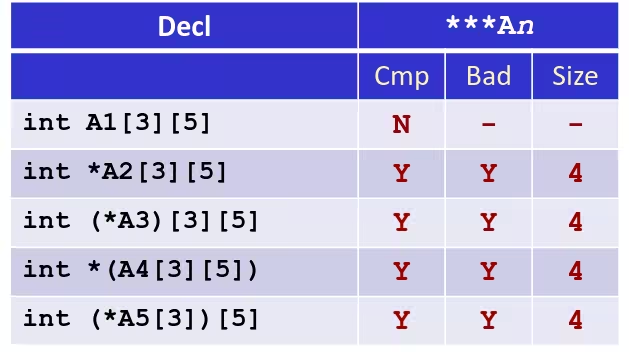

/* Get element A[i][j] */int var_ele(size_t n, int A[n][n], size_t i, size_t j) { return A[i][j];}imulq %rdx, %rdi # n*ileaq (%rsi, %rdi, 4), %rax # A + 4*n*imovl (%rax, %rcx, 4), %eax # A + 4*n*i + 4*jretUnderstanding Pointers & Array

- Cmp: Compiles (Y/N)

- Bad: Possible bad pointer reference (Y/N)

- Size: Value returned by

sizeof

Structures

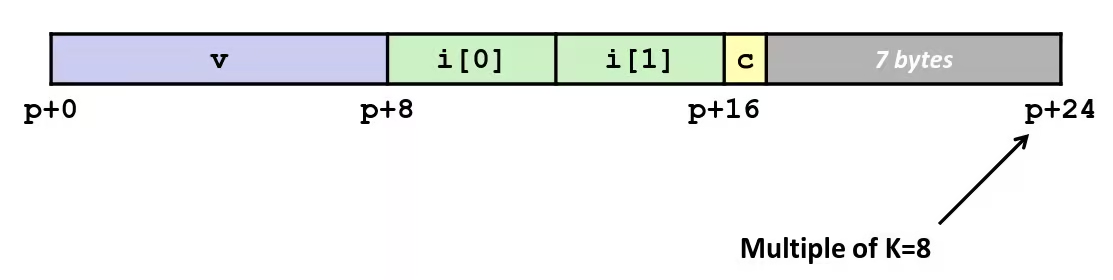

Allocation

- Structure represented as block of memory

- Big enough to hold all of the fields

- Fields ordered according to declaration

- Even if another ordering could yield a more compact representation

- Compiler determines overall size + positions of fields

- Machine-level program has no understanding of the structures in the source code

Access

Generating Pointer to Structure Member

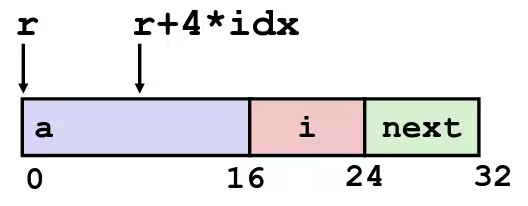

struct rec { int a[4]; size_t i; struct rec *next;};

int *get_ap(struct rec *r, size_t idx) { return &r->a[idx];}leaq (%rdi, %rsi, 4), %raxret

- Offset of each structure member determined at compile time

- Compute as

r + 4*idx

Following Linked List

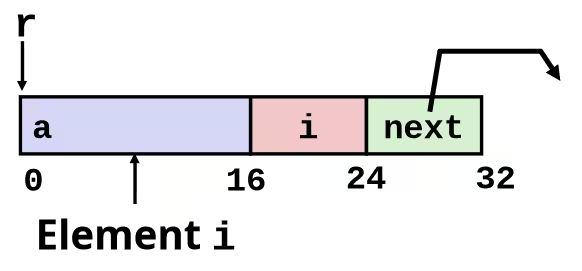

struct rec { int a[4]; int i; struct rec *next;};

void set_val(struct rec *r, int val) { while (r) { int i = r->i; r->a[i] = val; r = r->next; }}.L11: # loop: movslq 16(%rdi), %rax # i = M[r + 16] movl %esi, (%rdi, %rax, 4) # M[r + 4*i] = val movq 24(%rdi), %rdi # r = M[r + 24] testq %rdi, %rdi # Test r jne .L11 # if != 0 goto loop

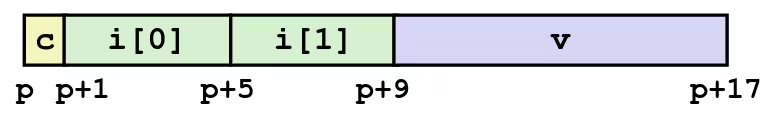

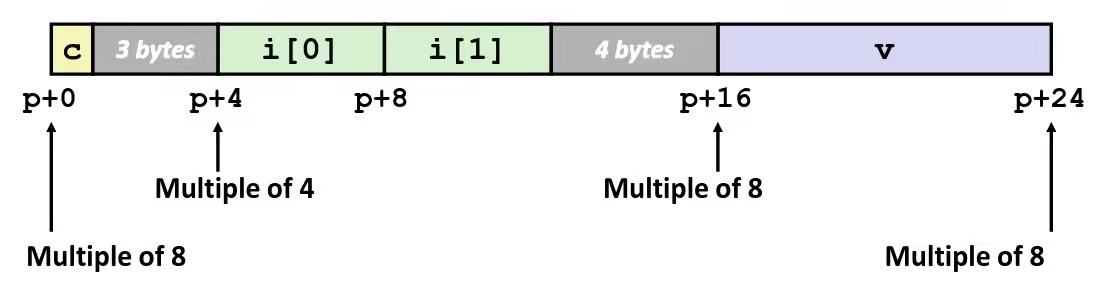

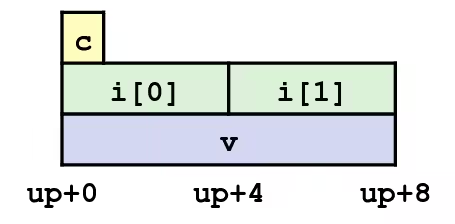

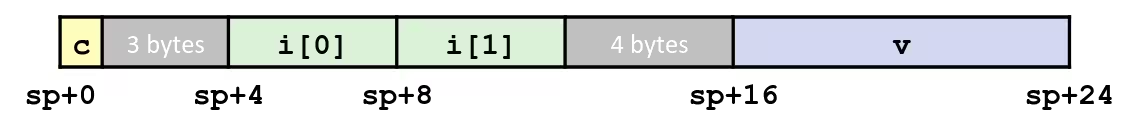

Alignment

struct S1 { char c; int i[2]; double v;} *p;- Unaligned Data

- Aligned Data

- Primitive data type requires

Kbytes - Address must be multiple of

K - Required on some machines; advised on x86-64

- Primitive data type requires

-

Motivation for Aligning Data

- Memory accessed by (aligned) chunks of 4 or 8 bytes (system dependent)

- Inefficient to load or store datum that spans quad word boundaries

- Virtual memory trickier when datum spans 2 pages

- Memory accessed by (aligned) chunks of 4 or 8 bytes (system dependent)

-

Compiler

- Inserts gaps in structure to ensure correct alignment of fields

Specific Cases of Alignment (x86-64)

- 1 byte:

char, …- no restrictions on address

- 2 bytes:

short, …- lowest 1 bit of address must be

- 4 bytes:

int,float, …- lowest 2 bits of address must be

- 8 bytes:

double,long,char *, …- lowest 3 bits of address must be

IMPORTANTIf an address requires alignment to bytes, then its lowest bits must be .

Satisfying Alignment with Structures

-

Within structure

- Must satisfy each element’s alignment requirement

-

Overall structure placement

- Each structure has alignment requirement

KKis largest alignment of any element

- Initial address & structure length must be multiples of

K

- Each structure has alignment requirement

-

Example

struct S2 { double v; int i[2]; char c;} *p;

Arrays of Structures

- Overall structure length multiple of

K - Satisfy alignment requirement for every element

struct S2 { double v; int i[2]; char c;} a[10];

Accessing Array Elements

- Compute array offset

12*idxsizeof(S3), including alignment spacers

- Element

jis at offset 8 within structure - Assembler gives offset

a+8- Resolved during linking

struct S3 { short i; float v; short j;} a[10];

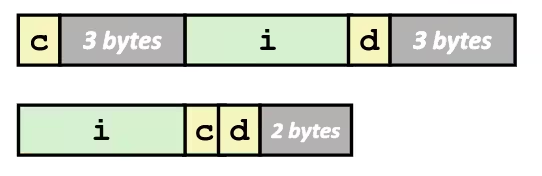

short get_j(int idx) { return a[idx].j;}leaq (%rdi, %rdi, 2), %rax # 3*idxmovzwl a+8(, %rax, 4), %eaxSaving Space

- Put large data types first

struct S4 { char c; int i; char d;} *p;struct S5 { int i; char c; char d;} *p;

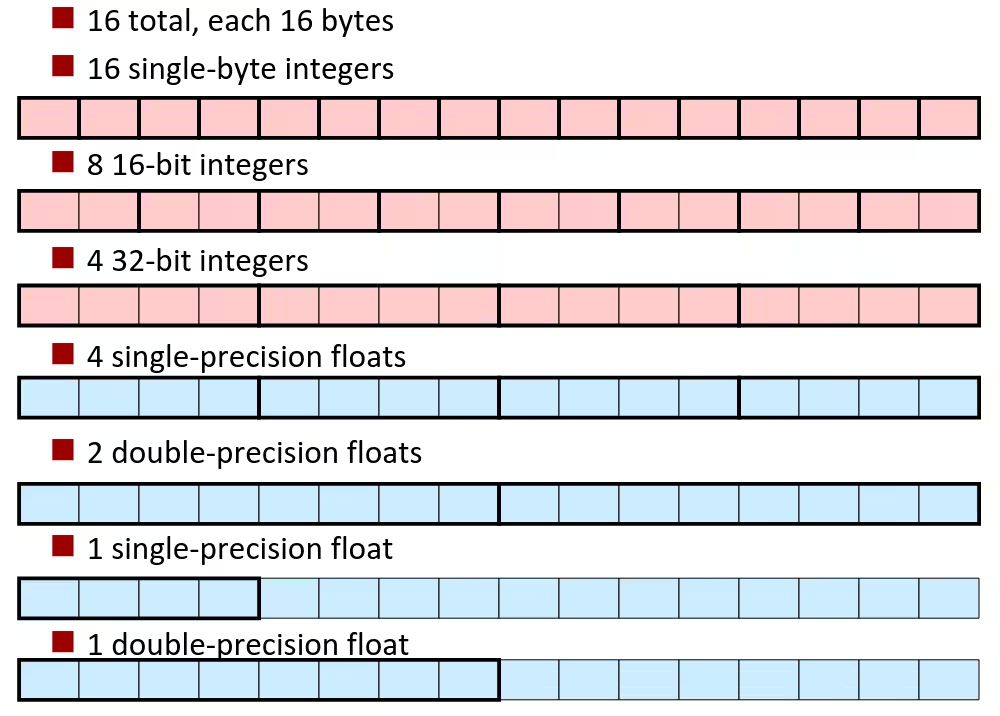

Floating Point

x87 FP

- Legacy, very ugly

SSE3 FP

XMM Registers

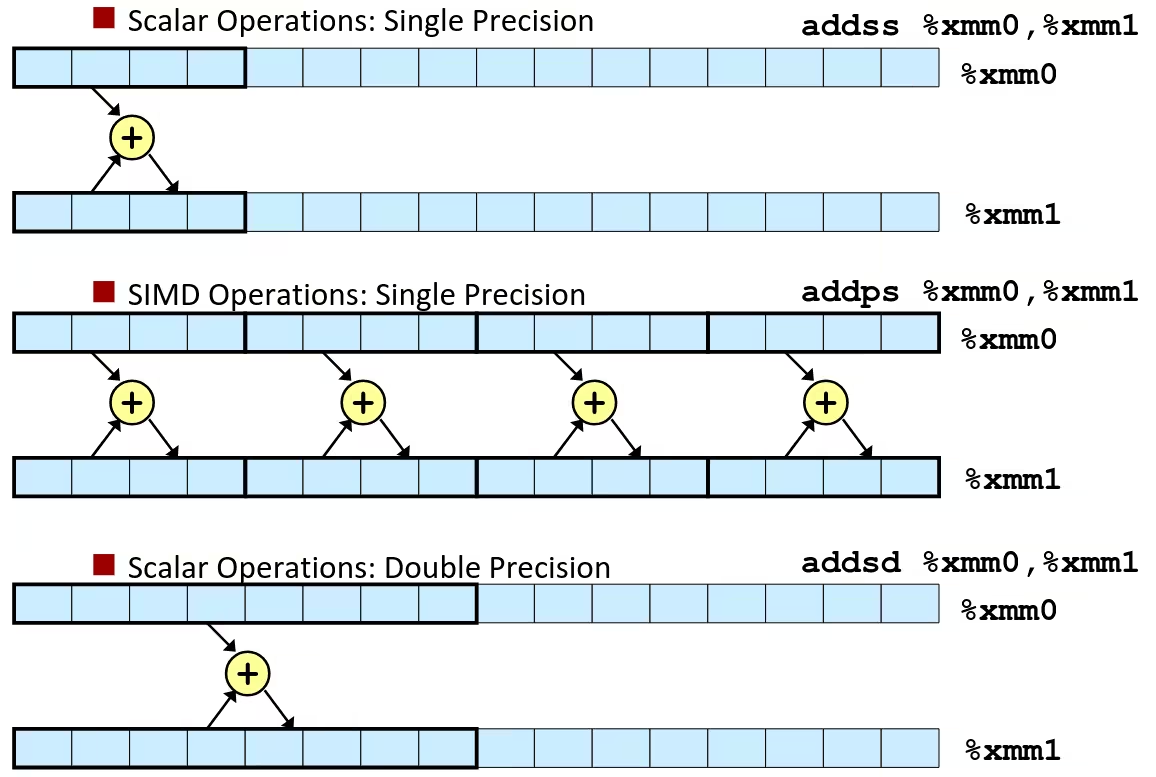

Scalar & SIMD Operations

SIMD (Single instruction, multiple data), there are a ton of usage combinations, e.g.:

addss (ADD Scalar Single-Precision Floating-Point Values)addps (ADD Packed Single-Precision Floating-Point Values)

Basics

- Arguments passed in

%xmm0,%xmm1, … - Result returned in

%xmm0 - All

XMMregisters caller-saved

float fadd(float x, float y) { return x + y;}addss %xmm1, %xmm0retdouble dadd(double x, double y) { return x + y;}addsd %xmm1, %xmm0retMemory Referencing

- Integer (and pointer) arguments passed in regular registers

- Floating Point values passed in

XMMregisters - Different

movinstructions to move betweenXMMregisters, and between memory andXMMregisters

double dincr(double *p, double v) { double x = *p; *p = x + v; return x;}movapd %xmm0, %xmm1 # Copy vmovsd (%rdi), %xmm0 # x = *paddsd %xmm0, %xmm1 # t = x + vmovsd %xmm1, (%rdi) # *p = tretmovapd (Move Aligned Packed Double-Precision Floating-Point Values)

Other Aspects of Floating Point Instructions

- Lots of instructions

- Different operations, different formats, …

- Floating-point comparisons

- Instructions

ucomiss (Unordered Compare Scalar Single-Precision)anducomisd (Unordered Compare Scalar Double-Precision) - Set condition codes

CF,ZF, andPF

- Instructions

- Using constant values

- Set

XMM0register to 0 with instructionxorpd %xmm0, %xmm0 - Others loaded from memory

- Set

AVX FP

- Newest version

- Similar to SSE

Floating Point assembly instruction sets are very nasty, though it’s principle thought is simple. So I am just skipping this chapter as TODO. Bro really don’t want learn this chapter…

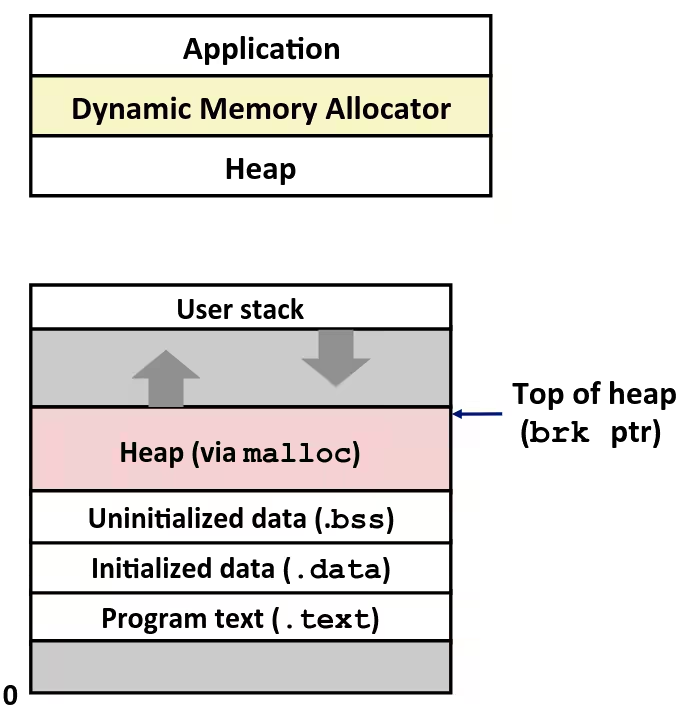

Memory Layout

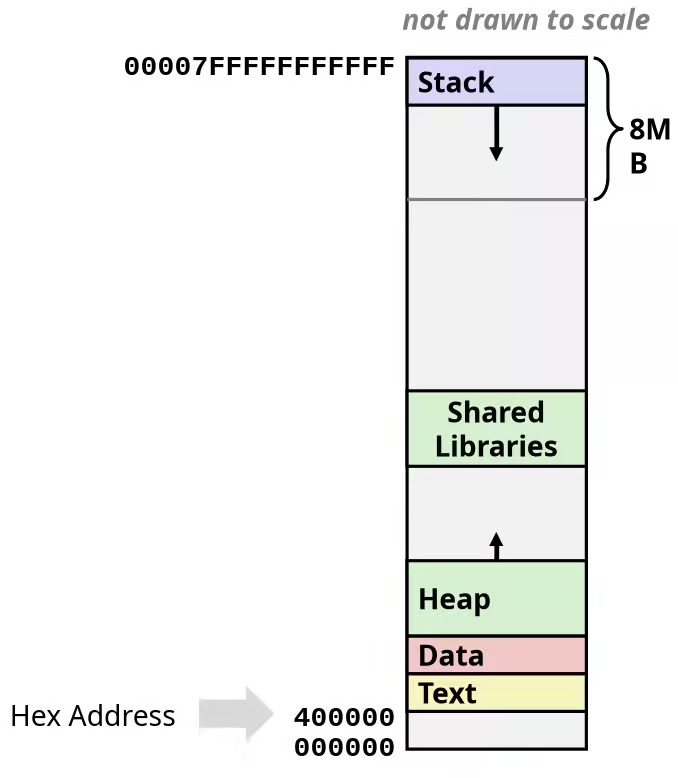

x86-64 Linux Memory Layout

- Stack

- Runtime stack (8MB limit, check by

limitcommand) - E.g., local variables

- Runtime stack (8MB limit, check by

- Heap

- Dynamically allocated as needed

- When call

malloc(),calloc(),new()

- Data

- Statically allocated data

- E.g., global vars,

staticvars, string constants

- Text / Shared Libraries

- Executable machine instructions

- Read-only

TIPIn common, the canonical address range in x86-64 is 47 bits address. That is why the maximum address show as

0x00007FFFFFFFFFFF.

Unions

Allocation

- Allocate according to largest element

- Can only use one field at a time

union U1 { char c; int i[2]; double v;} *up;

struct S1 { char c; int i[2]; double v;} *sp;

Program Optimization

-

There’s more to performance than asymptotic complexity

-

Must optimize at multiple levels: algorithm, data representations, procedures, and loops

Optimizing Compilers

- Provide efficient mapping of program to machine

- register allocation

- code selection and ordering (scheduling)

- dead code elimination

- eliminating minor inefficiencies

- Don’t (usually) improve asymptotic efficiency

- up to programmer to select best overall algorithm

- Big-O savings are (often) more important than constant factors

- but constant factors also matter

- Have difficulty overcoming “optimization blockers”

- potential memory aliasing

- potential procedure side-effects

Limitations of Optimizing Compilers

- Operate under fundamental constraint

- Must not cause any change in program behavior

- Except, possibly when program making use of nonstandard language features

- Often prevents it from making optimizations that would only affect behavior under pathological conditions.

- Must not cause any change in program behavior

- Behavior that may be obvious to the programmer can be obfuscated by languages and coding styles

- E.g., Data ranges may be more limited than variable types suggest

- Most analysis is performed only within procedures

- Whole-program analysis is too expensive in most cases

- Newer versions of GCC do interprocedural analysis within individual files

- But, not between code in different files

- Most analysis is based only on static information

- Compiler has difficulty anticipating run-time inputs

- When in doubt, the compiler must be conservative

Generally Useful Optimizations

Optimizations that you or the compiler should do regardless of processor / compiler

- Code Motion

- Reduce frequency with which computation performed

- If it will always produce same result

- Especially moving code out of loop

- Reduce frequency with which computation performed

void set_row(double *a, double *b, long i, long n) { long j; for (j = 0; j < n; j++) a[n*i+j] = b[j];}void set_row(double *a, double *b, long i, long n) { long j; int ni = n*i; for (j = 0; j < n; j++) a[ni+j] = b[j];}Compiler-Generated Code Motion (-O1)

void set_row(double *a, double *b, long i, long n) { long j; for (j = 0; j < n; j++) a[n*i+j] = b[j];}set_row: testq %rcx, %rcx # Test n jle .L1 # If 0, goto done imulq %rcx, %rdx # ni = n*i leaq (%rdi , %rdx, 8), %rdx # rowp = A + ni*8 movl $0, %eax # j = 0.L3: # loop: movsd (%rsi, %rax, 8), %xmm0 # t = b[j] movsd %xmm0, (%rdx, %rax, 8) # M[A + ni*8 + j*8] = t addq $1, %rax # j++ cmpq %rcx, %rax # j:n jne .L3 # if !=, goto loop.L1: # done: rep ; retTo the C code:

void set_row(double *a, double *b, long i, long n) { long j; long ni = n*i; double *rowp = a+ni; for (j = 0; j < n; j++) *rowp++ = b[j];}Reduction in Strength

- Replace costly operation with simpler one

- Shift, add instead of multiply or divide

- Utility machine dependent

- Depends on cost of multiply or divide instruction

- Recognize sequence of products

for (i = 0; i < n; i++) { int ni = n*i; for (j = 0; j < n; j++) a[ni + j] = b[j];}int ni = 0;for (i = 0; i < n; i++) { for (j = 0; j < n; j++) a[ni + j] = b[j]; ni += n;}Share Common Subexpressions

- Reuse portions of expressions

- GCC will do this with

–O1

up = val[(i-1)*n + j ];down = val[(i+1)*n + j ];left = val[i*n + j-1];right = val[i*n + j+1];sum = up + down + left + right;leaq 1(%rsi), %rax # i+1leaq -1(%rsi), %r8 # i-1imulq %rcx, %rsi # i*nimulq %rcx, %rax # (i+1)*nimulq %rcx, %r8 # (i-1)*naddq %rdx, %rsi # i*n+jaddq %rdx, %rax # (i+1)*n+jaddq %rdx, %r8 # (i-1)*n+j- 3 multiplications:

i*n,(i–1)*n,(i+1)*n

long inj = i*n + j;up = val[inj - n];down = val[inj + n];left = val[inj - 1];right = val[inj + 1];sum = up + down + left + right;imulq %rcx, %rsi # i*naddq %rdx, %rsi # i*n+jmovq %rsi, %rax # i*n+jsubq %rcx, %rax # i*n+j-nleaq (%rsi, %rcx), %rcx # i*n+j+n- 1 multiplication:

i*n

Optimization Blockers

NOTEI’m just skipping this chapter, so the notes are seems disordered. Will retake this chapter later.

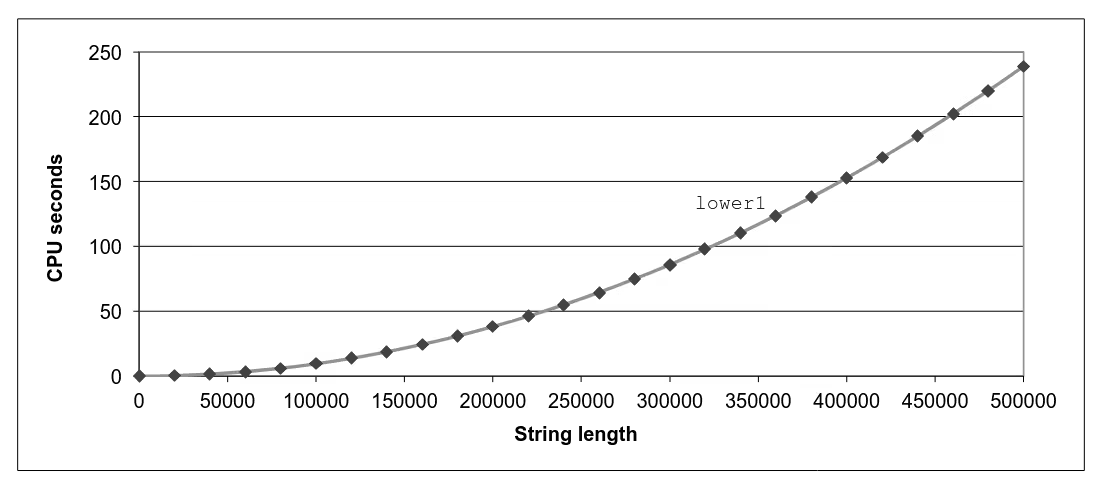

Optimization Blocker: Procedure Calls

void lower(char *s) { size_t i; for (i = 0; i < strlen(s); i++) if (s[i] >= 'A' && s[i] <= 'Z') s[i] -= ('A' - 'a');}strlenexecuted every iteration- Time quadruples when double string length

- Quadratic performance

Calling strlen

/* My version of strlen */size_t strlen(const char *s) { size_t length = 0; while (*s != '\0') { s++; length++; } return length;}- strlen performance

- Only way to determine length of string is to scan its entire length, looking for null character

- Overall performance, string of length N

- N calls to strlen

- Require times

N,N-1,N‐2, …,1 - Overall performance

Improving Performance

void lower(char *s) { size_t i; size_t len = strlen(s); for (i = 0; i < len; i++) if (s[i] >= 'A' && s[i] <= 'Z') s[i] -= ('A' - 'a');}- Move call to

strlenoutside of loop - Since result does not change from one iteration to another

- Form of code motion

Improved Lower Case Conversion Performance

Why couldn’t compiler move strlen out of inner loop ?

- Procedure may have side effects

- Alters global state each time called

- Function may not return same value for given arguments

- Depends on other parts of global state

- Procedure lower could interact with

strlen

- Warning

- Compiler treats procedure call as a black box

- Weak optimizations near them

- Remedies

- Use of inline functions

- GCC does this with

–O1within single file

- GCC does this with

- Do your own code motion

- Use of inline functions

Memory Matters

/* Sum rows is of n X n matrix a and store in vector b */void sum_rows1(double *a, double *b, long n) { long i, j; for (i = 0; i < n; i++) { b[i] = 0; for (j = 0; j < n; j++) b[i] += a[i*n + j]; }}# sum_rows1 inner loop.L4: movsd (%rsi, %rax, 8), %xmm0 # FP load addsd (%rdi), %xmm0 # FP add movsd %xmm0, (%rsi, %rax, 8) # FP store addq $8, %rdi cmpq %rcx, %rdi jne .L4Memory Aliasing

- Code updates

b[i]on every iteration - Must consider possibility that these updates will affect program behavior

Removing Aliasing

/* Sum rows is of n X n matrix a and store in vector b */void sum_rows2(double *a, double *b, long n) { long i, j; for (i = 0; i < n; i++) { double val = 0; for (j = 0; j < n; j++) val += a[i*n + j]; b[i] = val; }}# sum_rows2 inner loop.L10: addsd (%rdi), %xmm0 # FP load + add addq $8, %rdi cmpq %rax, %rdi jne .L10- No need to store intermediate results

Optimization Blocker: Memory Aliasing

- Aliasing

- Two different memory references specify single location

- Easy to have happen in C

- Since allowed to do address arithmetic

- Direct access to storage structures

- Get in habit of introducing local variables

- Accumulating within loops

- Your way of telling compiler not to check for aliasing

Exploiting Instruction‐Level Parallelism

TODO

Dealing with Conditionals

TODO

The Memory Hierarchy

TODO

Cache Memories

TODO

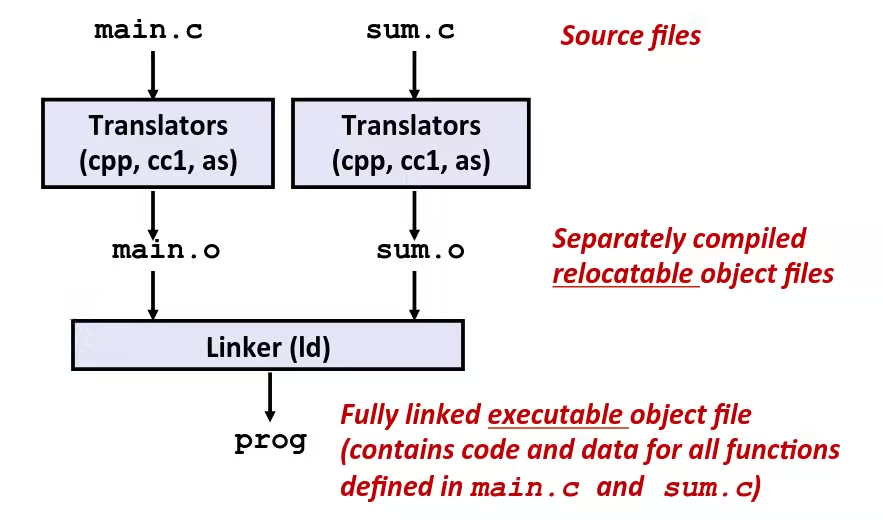

Linking

Static Linking

Programs are translated and linked using a compiler driver: gcc -Og -o prog main.c sum.c

Why Linkers ?

- Reason 1: Modularity

- Program can be written as a collection of smaller source files, rather than one monolithic mass

- Can build libraries of common functions

- E.g., Math library, Standard C library

- Reason 2: Efficiency

- Time: Separate compilation

- Change one source file, compile, and then relink

- No need to recompile other source files

- Space: Libraries

- Common functions can be aggregated into a single file

- Yet executable files and running memory images contain only code for the functions they actually use

- Time: Separate compilation

What Do Linkers Do ?

- Step 1: Symbol resolution

- Programs define and reference symbols (global variables and functions)

void swap() { ... } /* define symbol swap */swap(); /* reference symbol swap */int *xp = &x; /* define symbol xp, reference x */

- Symbol definitions are stored in object file (by assembler) in symbol table

- Symbol table is an array of

structs - Each entry includes name, size, and location of symbol

- Symbol table is an array of

- During symbol resolution step, the linker associates each symbol reference with exactly one symbol definition

- Programs define and reference symbols (global variables and functions)

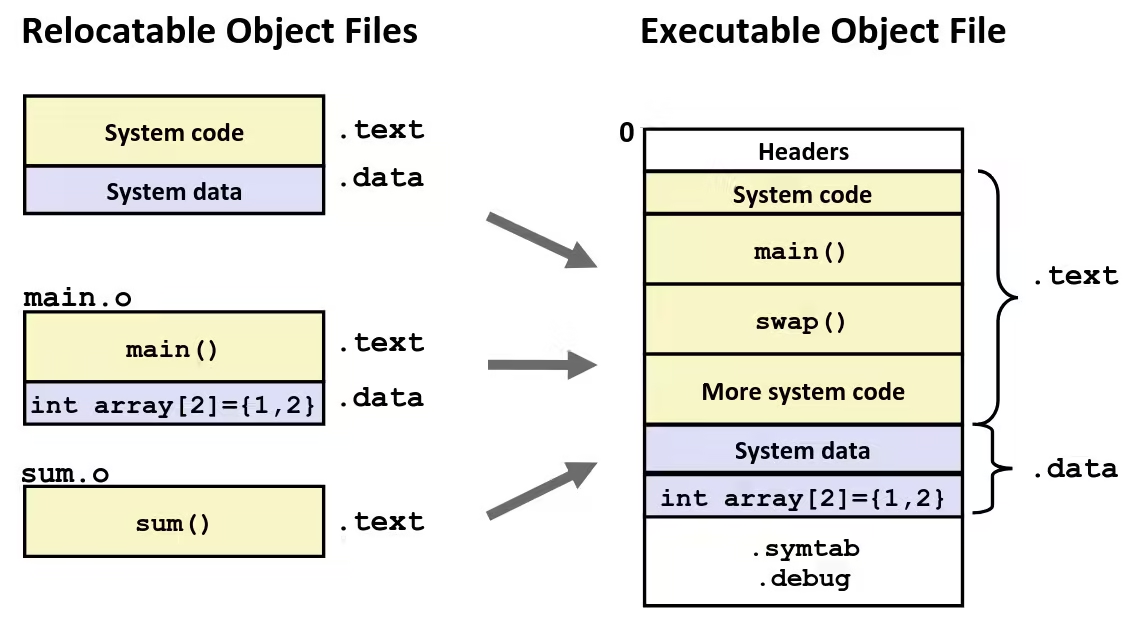

- Step 2: Relocation

- Merges separate code and data sections into single sections

- Relocates symbols from their relative locations in the

.ofiles to their final absolute memory locations in the executable - Updates all references to these symbols to reflect their new positions

Three Kinds of Object Files (Modules)

- Relocatable object file (

.ofile)- Contains code and data in a form that can be combined with other relocatable object files to form executable object file

- Each

.ofile is produced from exactly one source (.c) file

- Each

- Contains code and data in a form that can be combined with other relocatable object files to form executable object file

- Executable object file (

a.outfile)- Contains code and data in a form that can be copied directly into memory and then executed

- Shared object file (

.sofile)- Special type of relocatable object file that can be loaded into memory and linked dynamically, at either load time or run-time

- Called Dynamic Link Libraries (DLLs) by Windows

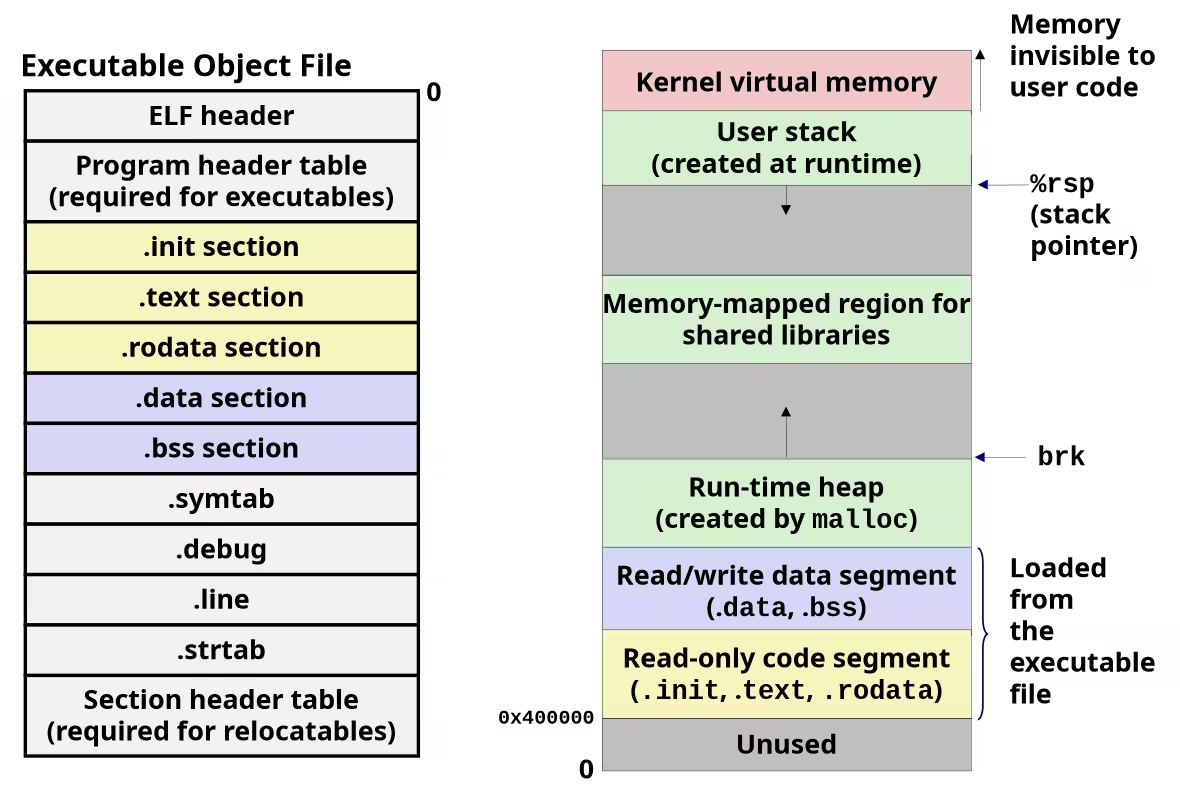

Executable and Linkable Format (ELF)

- Standard binary format for object files

- One unified format for

- Relocatable object files (

.o) - Executable object files (

a.out) - Shared object files (

.so)

- Relocatable object files (

- Generic name: ELF binaries

ELF Object File Format

- ELF header

- Word size, byte ordering, file type (

.o,exec,.so), machine type, etc

- Word size, byte ordering, file type (

- Segment header table (required for executables)

- Page size, virtual addresses memory segments (sections), segment sizes

.textsection- Code

.rodatasection- Read only data: jump tables, …

.datasection- Initialized global variables

.bsssection- Global variables that are uninitialized or initialized to zero

- Block Started by Symbol

- “Better Save Space”

- Has section header but occupies no space

.symtabsection- Symbol table

- Procedure and static variable names

- Section names and locations

.rel.textsection- Relocation info for

.textsection - Addresses of instructions that will need to be modified in the executable

- Instructions for modifying

- Relocation info for

.debugsection- Info for symbolic debugging (

gcc -g)

- Info for symbolic debugging (

- Section header table

- Offsets and sizes of each section

Linker Symbols

- Global symbols

- Symbols defined by module m that can be referenced by other modules

- E.g.: non-static C functions and non-static global variables

- External symbols

- Global symbols that are referenced by module m but defined by some other module

- Local symbols

- Symbols that are defined and referenced exclusively by module m

- E.g.: C functions and global variables defined with the static attribute

- Local linker symbols are not local program variables

Local non-static C variables vs. local static C variables

- Local non-static C variables stored on the stack

- Local static C variables stored in either

.bss, or.data

int f() { static int x = 0; /* .bss */ return x;}

int g() { static int x = 1; /* .data */ return x;}In the case above, compiler allocates space in .data for each definition of x and creates local symbols in the symbol table with unique names, e.g., x.1 and x.2.

IMPORTANTLocal static variables are only initialized at first time encountered, calls after won’t reinitialize.

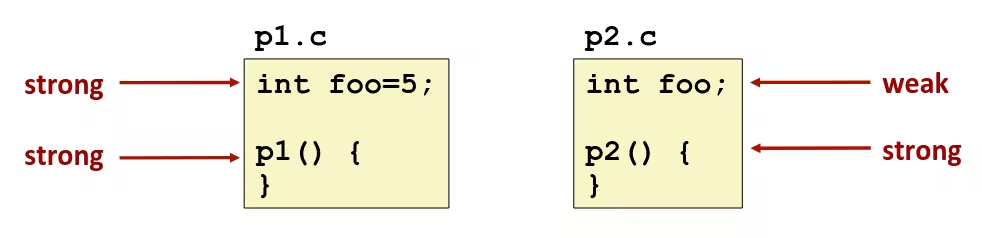

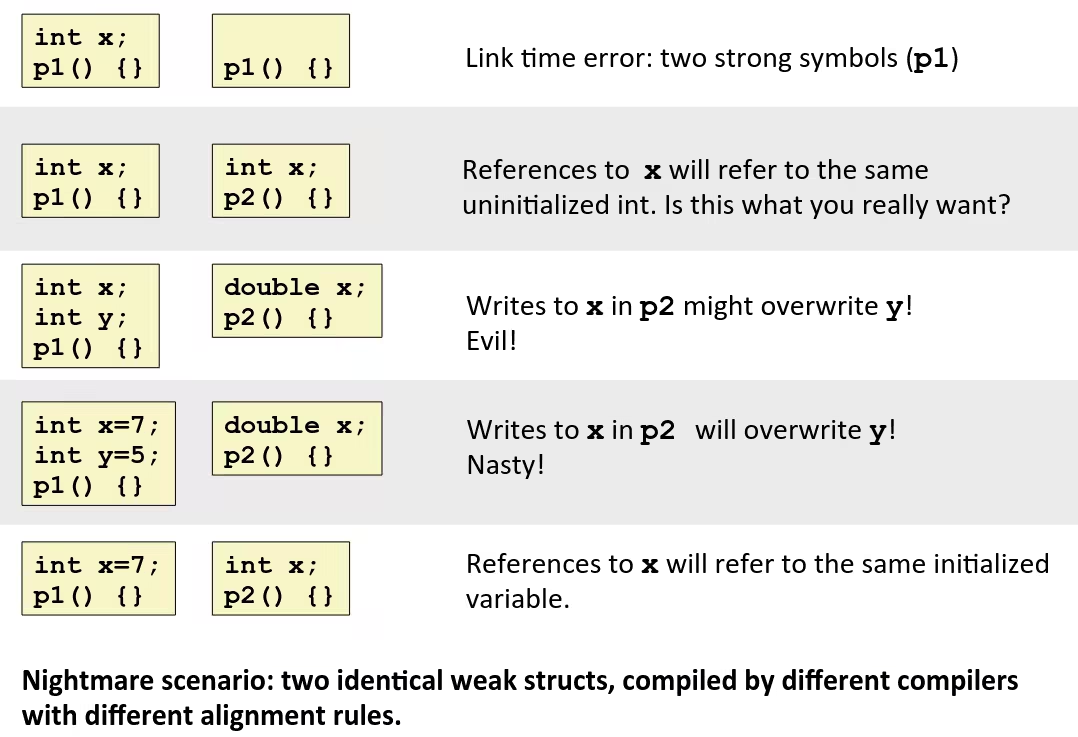

How Linker Resolves Duplicate Symbol Definitions

- Program symbols are either

strongorweak- Strong: procedures and initialized globals

- Weak: uninitialized globals

Linker’s Symbol Rules

- Rule 1: Multiple strong symbols are not allowed

- Each item can be defined only once

- Otherwise: Linker error

- Rule 2: Given a strong symbol and multiple weak symbols, choose the strong symbol

- References to the weak symbol resolve to the strong symbol

- Rule 3: If there are multiple weak symbols, pick an arbitrary one

- Can override this with

gcc –fno-common

- Can override this with

Global Variables

- Avoid if you can

- Otherwise

- Use

staticif you can - Initialize if you define a global variable

- Use

externif you reference an external global variable

- Use

Relocation

Relocation Entries

int array[2] = {1, 2};

int main() { int val = sum(array, 2); return val;}0000000000000020 <main>: 20: be 02 00 00 00 mov $0x2,%esi 25: 48 8d 3d 00 00 00 00 lea 0x0(%rip),%rdi # 2c <main+0xc> 28: R_X86_64_PC32 array-0x4 2c: e8 00 00 00 00 call 31 <main+0x11> 2d: R_X86_64_PLT32 sum-0x4 31: c3 retRelocated .text section

0000000000001120 <sum>: 1120: ba 00 00 00 00 mov $0x0,%edx 1125: b8 00 00 00 00 mov $0x0,%eax 112a: eb 0d jmp 1139 <sum+0x19> 112c: 0f 1f 40 00 nopl 0x0(%rax) 1130: 48 63 c8 movslq %eax,%rcx 1133: 03 14 8f add (%rdi,%rcx,4),%edx 1136: 83 c0 01 add $0x1,%eax 1139: 39 f0 cmp %esi,%eax 113b: 7c f3 jl 1130 <sum+0x10> 113d: 89 d0 mov %edx,%eax 113f: c3 ret

0000000000001140 <main>: 1140: be 02 00 00 00 mov $0x2,%esi 1145: 48 8d 3d c4 2e 00 00 lea 0x2ec4(%rip),%rdi # 4010 <array> 114c: e8 cf ff ff ff call 1120 <sum> 1151: c3 retUsing PC-relative addressing for sum: 0x1120 = 0x1151 + 0xffffffcf

Loading Executable Object Files

Packaging Commonly Used Functions

- How to package functions commonly used by programmers ?

- Math, I/O, memory management, string manipulation, etc

- Awkward, given the linker framework so far:

- Option 1: Put all functions into a single source file

- Programmers link big object file into their programs

- Space and time inefficient

- Option 2: Put each function in a separate source file

- Programmers explicitly link appropriate binaries into their programs

- More efficient, but burdensome on the programmer

- Option 1: Put all functions into a single source file

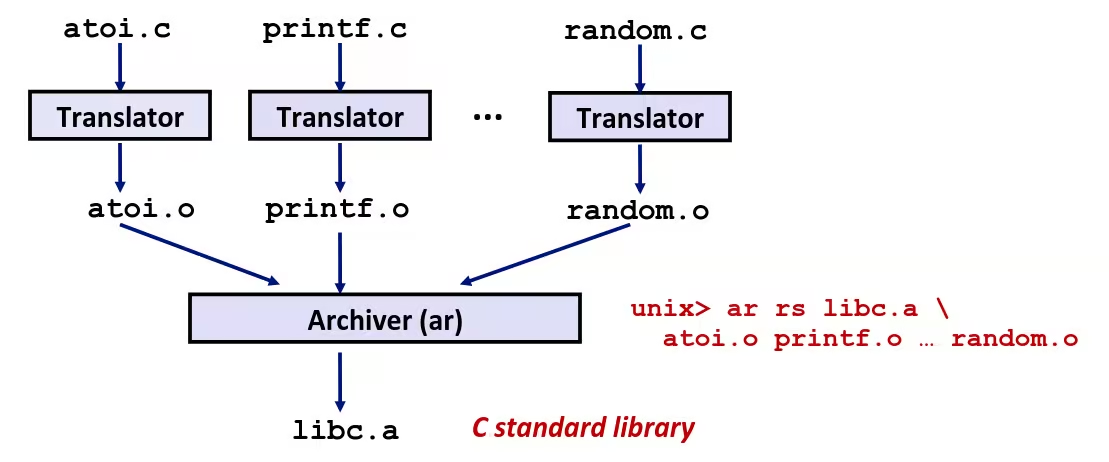

Old-fashioned Solution: Static Libraries

- Static libraries (

.aarchive files)- Concatenate related relocatable object files into a single file with an index (called an archive)

- Enhance linker so that it tries to resolve unresolved external references by looking for the symbols in one or more archives

- If an archive member file resolves reference, link it into the executable

Creating Static Libraries

- Archiver allows incremental updates

- Recompile function that changes and replace

.ofile in archive

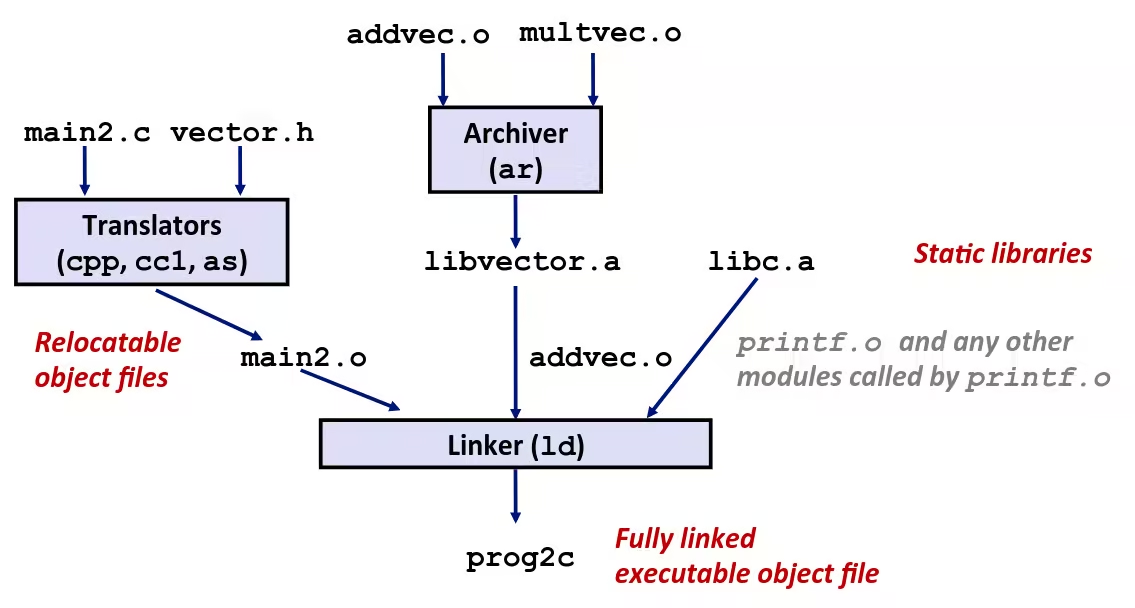

Linking with Static Libraries

Using Static Libraries

- Linker’s algorithm for resolving external references

- Scan

.ofiles and.afiles in the command line order - During the scan, keep a list of the current unresolved references

- As each new

.oor.afile, obj, is encountered, try to resolve each unresolved reference in the list against the symbols defined in obj - If any entries in the unresolved list at end of scan, then error

- Scan

- Problem

- Command line order matters!

- Moral: put libraries at the end of the command line

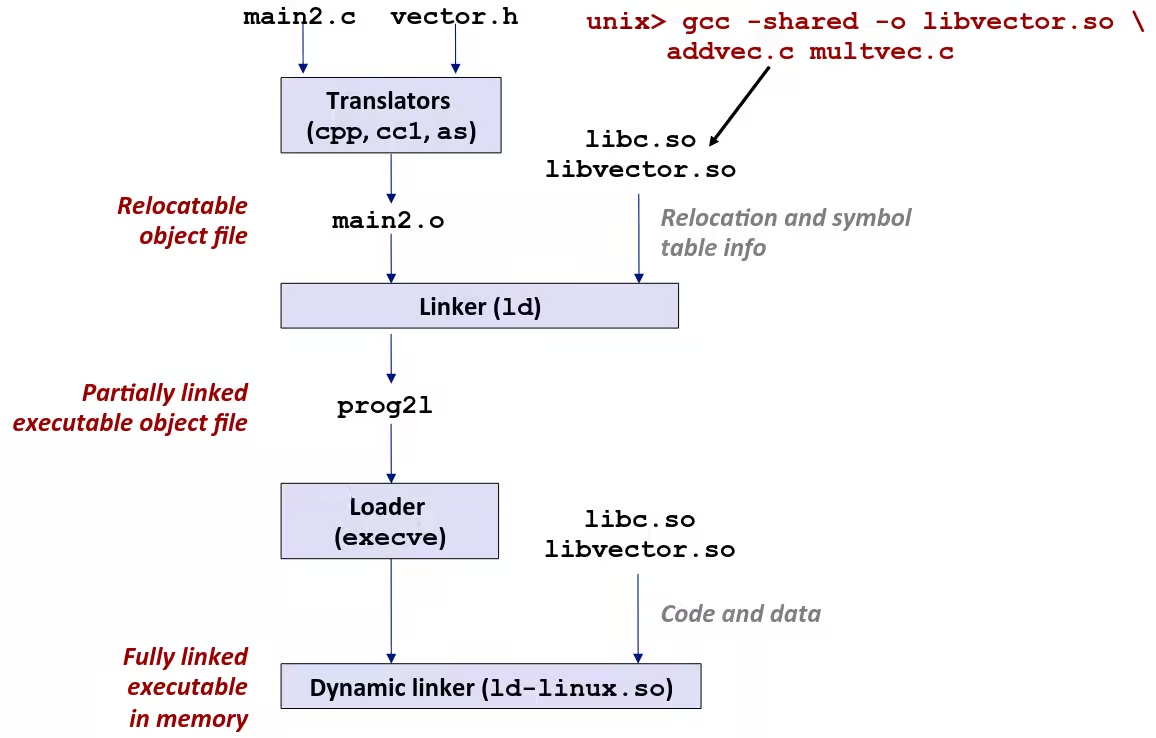

unix> gcc -L. libtest.o -lmineunix> gcc -L. -lmine libtest.olibtest.o: In function `main':libtest.o(.text+0x4): undefined reference to `libfun'Shared Libraries

-

Static libraries have the following disadvantages

- Duplication in the stored executables (every function needs libc)

- Duplication in the running executables

- Minor bug fixes of system libraries require each application to explicitly relink

-

Modern solution: Shared Libraries

- Object files that contain code and data that are loaded and linked into an application dynamically, at either load-time or run-time

- Also called: dynamic link libraries, DLLs,

.sofiles

-

Dynamic linking can occur when executable is first loaded and run (load-time linking)

- Common case for Linux, handled automatically by the dynamic linker (

ld-linux.so) Standard C library (libc.so) usually dynamically linked

- Common case for Linux, handled automatically by the dynamic linker (

-

Dynamic linking can also occur after program has begun (run-time linking)

- In Linux, this is done by calls to the

dlopen()interface- Distributing software

- High-performance web servers

- Runtime library interpositioning

- In Linux, this is done by calls to the

-

Shared library routines can be shared by multiple processes

Dynamic Linking at Load-time

Dynamic Linking at Run-time

#include <stdio.h>#include <stdlib.h>#include <dlfcn.h>

int x[2] = {1, 2};int y[2] = {3, 4};int z[2];

int main() { void *handle; void (*addvec)(int *, int *, int *, int); char *error;

/* Dynamically load the shared library that contains addvec() */ handle = dlopen("./libvector.so", RTLD_LAZY); if (!handle) { fprintf(stderr, "%s\n", dlerror()); exit(1); }

/* Get a pointer to the addvec() function we just loaded */ addvec = dlsym(handle, "addvec"); if ((error = dlerror()) != NULL) { fprintf(stderr, "%s\n", error); exit(1); }

/* Now we can call addvec() just like any other function */ addvec(x, y, z, 2); printf("z = [%d %d]\n", z[0], z[1]);

/* Unload the shared library */ if (dlclose(handle) < 0) { fprintf(stderr, "%s\n", dlerror()); exit(1); } return 0;}Library Interpositioning

- Its a powerful linking technique that allows programmers to intercept calls to arbitrary functions

- Interpositioning can occur at:

- Compile time: When the source code is compiled

- Link time: When the relocatable object files are statically linked to form an executable object file

- Load/Run time: When an executable object file is loaded into memory, dynamically linked, and then executed

Example Program

#include <stdio.h>#include <malloc.h>

int main() { int *p = malloc(32); free(p); return(0);}- Goal: trace the addresses and sizes of the allocated and freed blocks, without breaking the program, and without modifying the source code

- Three solutions: interpose on the lib

mallocandfreefunctions at compile time, link time, and load/run time

Compile-time Interpositioning

#ifdef COMPILETIME#include <stdio.h>#include <malloc.h>

/* malloc wrapper function */void *mymalloc(size_t size) { void *ptr = malloc(size); printf("malloc(%d)=%p\n", (int)size, ptr); return ptr;}

/* free wrapper function */void myfree(void *ptr) { free(ptr); printf("free(%p)\n", ptr);}#endif#define malloc(size) mymalloc(size)#define free(ptr) myfree(ptr)

void *mymalloc(size_t size);void myfree(void *ptr);linux> make intcgcc -Wall -DCOMPILETIME -c mymalloc.cgcc -Wall -I. -o intc int.c mymalloc.olinux> make runc./intcmalloc(32)=0x1edc010free(0x1edc010)linux>Link-time Interpositioning

#ifdef LINKTIME#include <stdio.h>

void *__real_malloc(size_t size);void __real_free(void *ptr);

/* malloc wrapper function */void *__wrap_malloc(size_t size) { void *ptr = __real_malloc(size); /* Call libc malloc */ printf("malloc(%d) = %p\n", (int)size, ptr); return ptr;}

/* free wrapper function */void __wrap_free(void *ptr) { __real_free(ptr); /* Call libc free */ printf("free(%p)\n", ptr);}#endiflinux> make intlgcc -Wall -DLINKTIME -c mymalloc.cgcc -Wall -c int.cgcc -Wall -Wl,--wrap,malloc -Wl,--wrap,free -o intl int.o mymalloc.olinux> make runl./intlmalloc(32) = 0x1aa0010free(0x1aa0010)linux>- The

-Wlflag passes argument to linker, replacing each comma with a space - The

--wrap,mallocarg instructs linker to resolve references in a special way:- Refs to

mallocshould be resolved as__wrap_malloc - Refs to

__real_mallocshould be resolved asmalloc

- Refs to

Load/Run time Interpositioning

#ifdef RUNTIME#define _GNU_SOURCE#include <stdio.h>#include <stdlib.h>#include <dlfcn.h>

/* malloc wrapper function */void *malloc(size_t size) { void *(*mallocp)(size_t size); char *error;

mallocp = dlsym(RTLD_NEXT, "malloc"); /* Get addr of libc malloc */ if ((error = dlerror()) != NULL) { fputs(error, stderr); exit(1); } char *ptr = mallocp(size); /* Call libc malloc */ printf("malloc(%d) = %p\n", (int)size, ptr); return ptr;}

/* free wrapper function */void free(void *ptr) { void (*freep)(void *) = NULL; char *error;

if (!ptr) return;

freep = dlsym(RTLD_NEXT, "free"); /* Get address of libc free */ if ((error = dlerror()) != NULL) { fputs(error, stderr); exit(1); } freep(ptr); /* Call libc free */ printf("free(%p)\n", ptr);}#endiflinux> make intrgcc -Wall -DRUNTIME -shared -fpic -o mymalloc.so mymalloc.c -ldlgcc -Wall -o intr int.clinux> make runr(LD_PRELOAD="./mymalloc.so" ./intr)malloc(32) = 0xe60010free(0xe60010)linux>- The

LD_PRELOADenvironment variable tells the dynamic linker to resolve unresolved refs (e.g., tomalloc) by looking inmymalloc.sofirst

Exceptional Control Flow

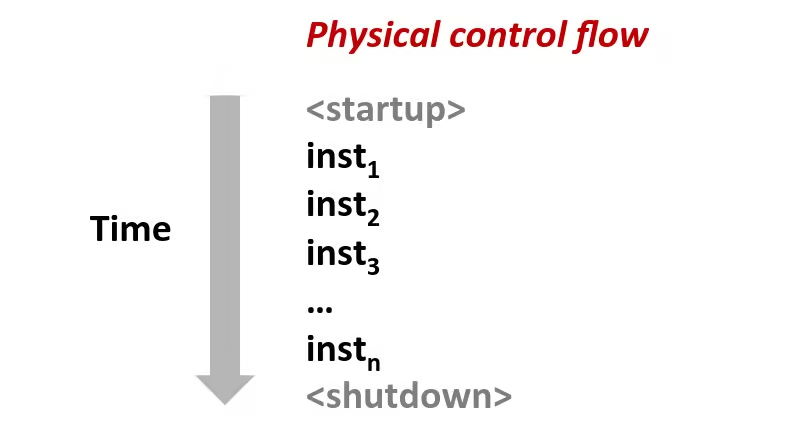

Control Flow

- Processors do only one thing:

- From startup to shutdown, a CPU simply reads and executes (interprets) a sequence of instructions, one at a time

- This sequence is the CPU’s control flow (or flow of control)

Up to now, we have learned two mechanisms for changing control flow:

- Jumps and branches

- Call and return

They react to changes in program state.

But its insufficient for a useful system: difficult to react to changes in system state:

- Data arrives from a disk or a network adapter

- Instruction divides by zero

- User hits

Ctrl-Cat the keyboard - System timer expires

That’s why we need mechanisms for “exceptional control flow”.

Exceptional Control Flow

- Exists at all levels of a computer system

- Low level mechanisms

- Exceptions

- Change in control flow in response to a system event (i.e., change in system state)

- Implemented using combination of hardware and OS software

- Exceptions

- Higher level mechanisms

- Process context switch

- Implemented by OS software and hardware timer

- Signals

- Implemented by OS software

- Nonlocal jumps:

setjmp()andlongjmp()- Implemented by C runtime library

- Process context switch

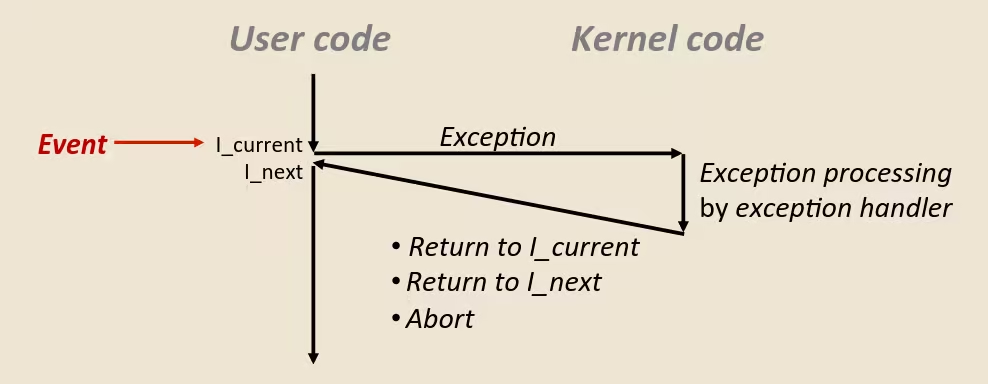

Exceptions

- An exception is a transfer of control to the OS kernel in response to some event (i.e., change in processor state)

- Kernel is the memory-resident part of the OS

- Examples of events: Divide by 0, arithmetic overflow, page fault, I/O request completes, typing

Ctrl‐C

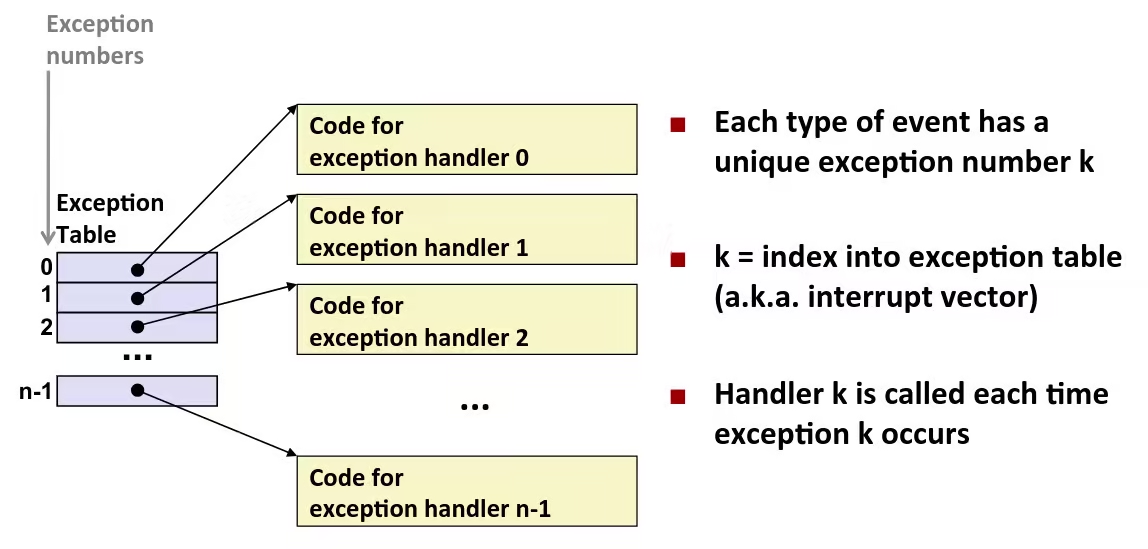

Exception Tables

Synchronous Exceptions

- Caused by events that occur as a result of executing an instruction

- Traps

- Intentional (e.g., system calls, breakpoint traps, special instructions)

- Returns control to “next” instruction

- Faults

- Unintentional but possibly recoverable (e.g., page faults (recoverable), protection faults (unrecoverable), floating point exceptions)

- Either re-executes faulting (“current”) instruction or aborts

- Aborts

- Unintentional and unrecoverable (e.g., illegal instruction, parity error, machine check)

- Aborts current program

- Traps

Asynchronous Exceptions (Interrupts)

- Caused by events external to the processor

- Indicated by setting the processor’s interrupt pin

- Handler returns to “next” instruction

- Examples:

- Timer interrupt

- Every few ms, an external timer chip triggers an interrupt

- Used by the kernel to take back control from user programs

- I/O interrupt from external device

- Hitting

Ctrl-Cat the keyboard - Arrival of a packet from a network

- Arrival of data from a disk

- Hitting

- Timer interrupt

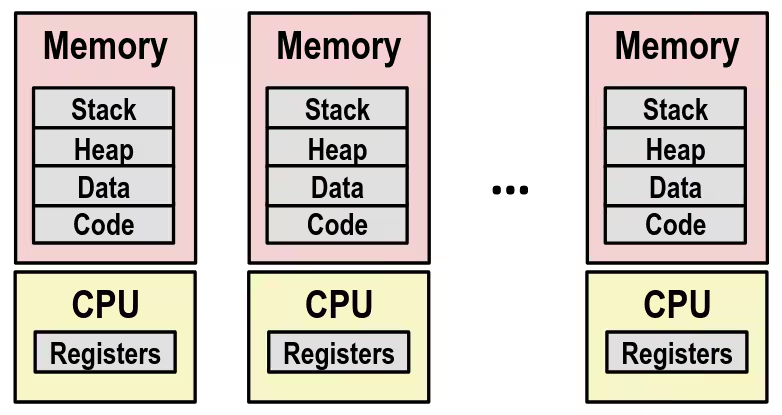

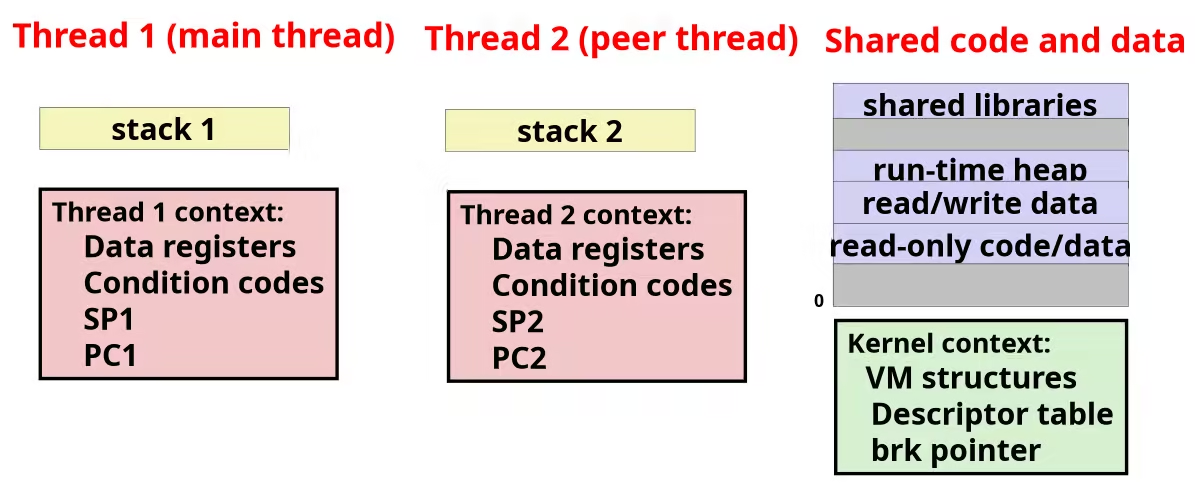

Processes

- Definition: A process is an instance of a running program

- One of the most profound ideas in computer science

- Not the same as “program” or “processor”

- Process provides each program with two key abstractions:

- Logical control flow

- Each program seems to have exclusive use of the CPU

- Provided by kernel mechanism called context switching

- Private address space

- Each program seems to have exclusive use of main memory

- Provided by kernel mechanism called virtual memory

- Logical control flow

Multiprocessing: The Illusion

- Computer runs many processes simultaneously

- Applications for one or more users

- Web browsers, email clients, editors, …

- Background tasks

- Monitoring network & I/O devices

- Applications for one or more users

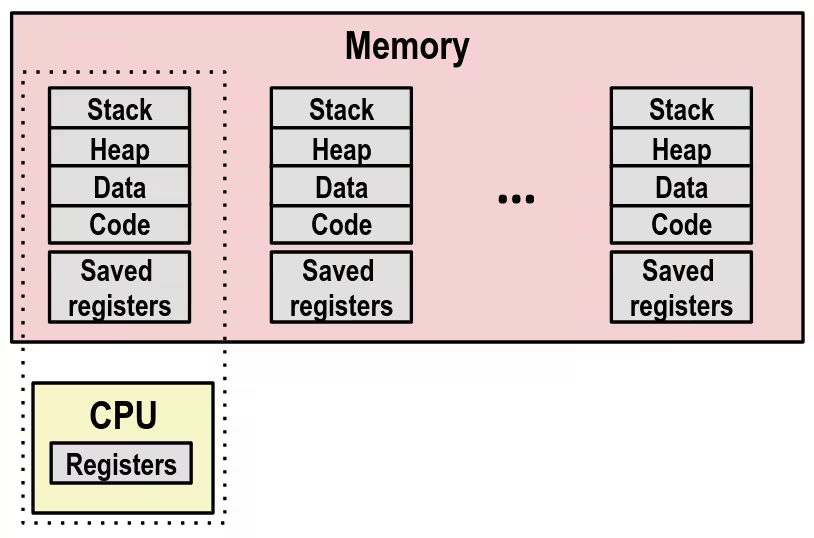

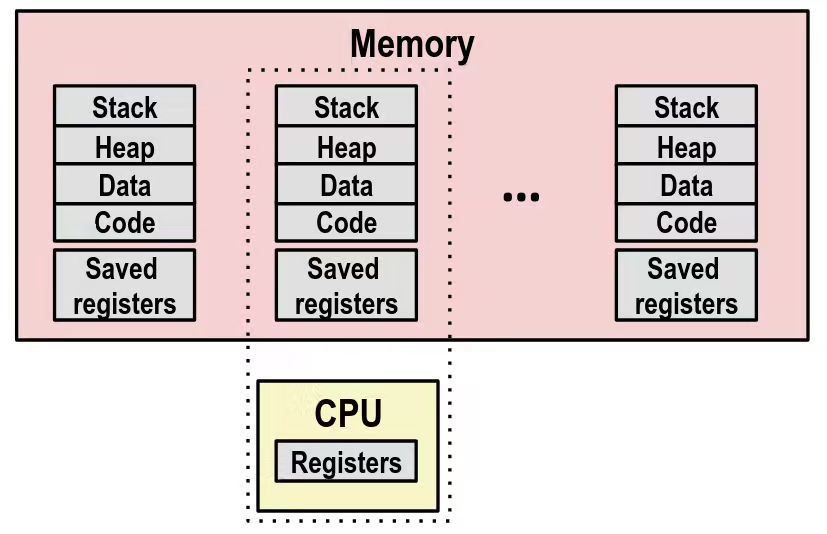

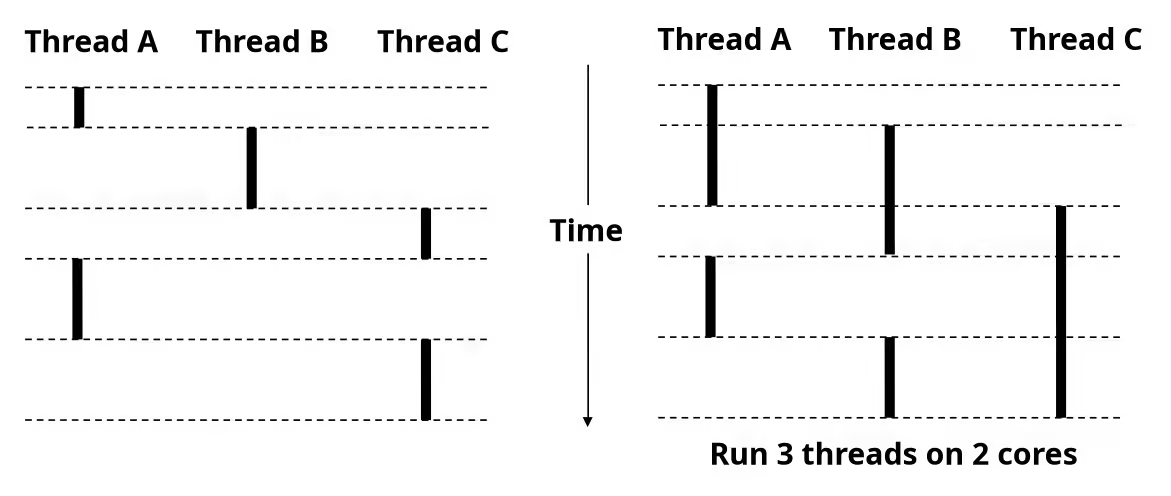

Multiprocessing: The (Traditional) Reality

- Single processor executes multiple processes concurrently

- Process executions interleaved (multitasking)

- Address spaces managed by virtual memory system

- Register values for non-executing processes saved in memory

- Save current registers in memory

- Schedule next process for execution

- Load saved registers and switch address space (context switch)

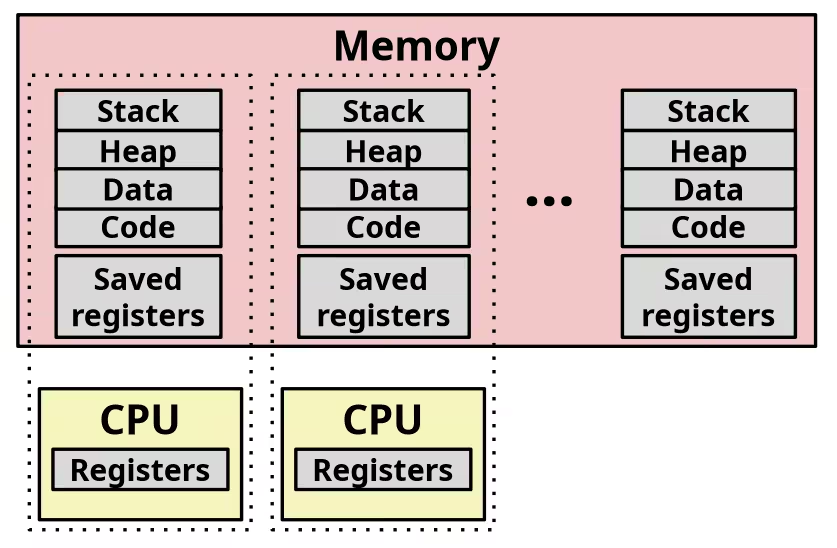

Multiprocessing: The (Modern) Reality

- Multicore processors

- Multiple CPUs on single chip

- Share main memory (and some of the caches)

- Each can execute a separate process

- Scheduling of processors onto cores done by kernel

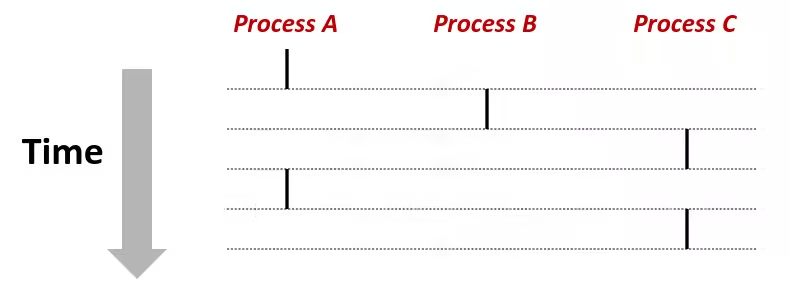

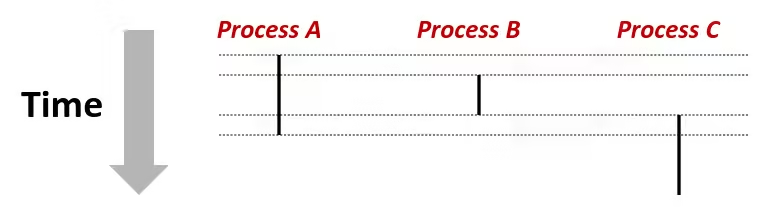

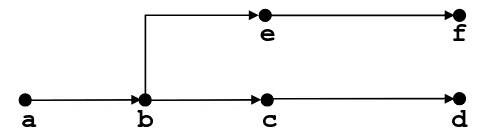

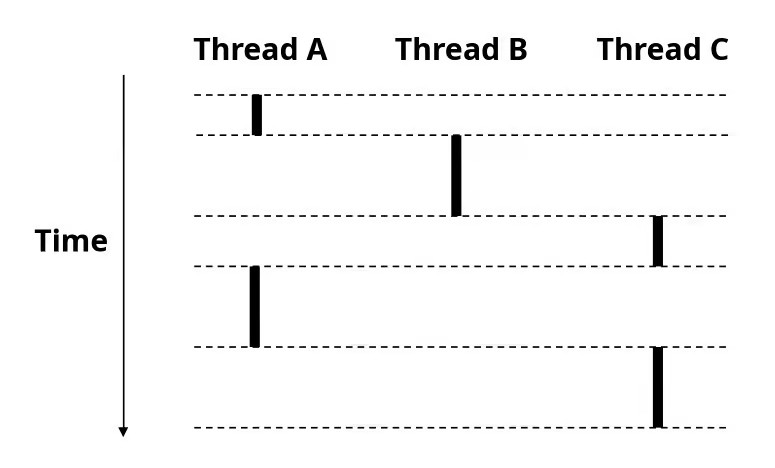

Concurrent Processes

- Each process is a logical control flow

- Two processes run concurrently (are concurrent) if their flows overlap in time

- Otherwise, they are sequential

- Examples (running on single core):

- Concurrent: A & B, A & C

- Sequential: B & C

User View of Concurrent Processes

- Control flows for concurrent processes are physically disjoint in time

- However, we can think of concurrent processes as running in parallel with each other

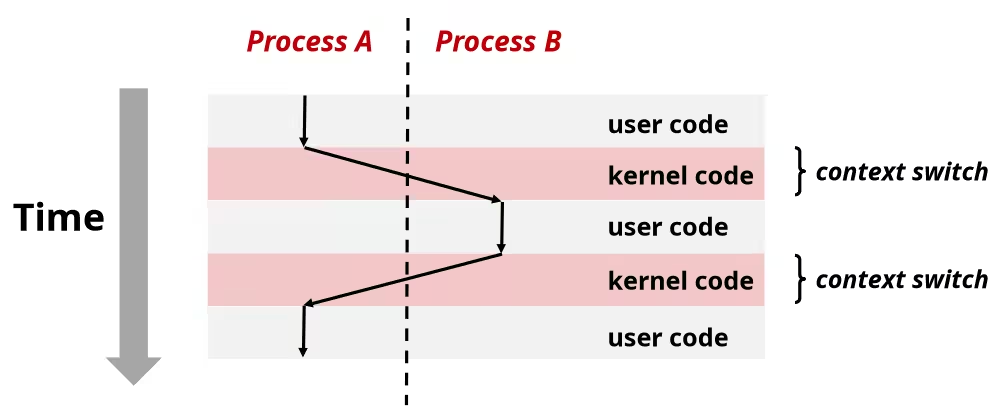

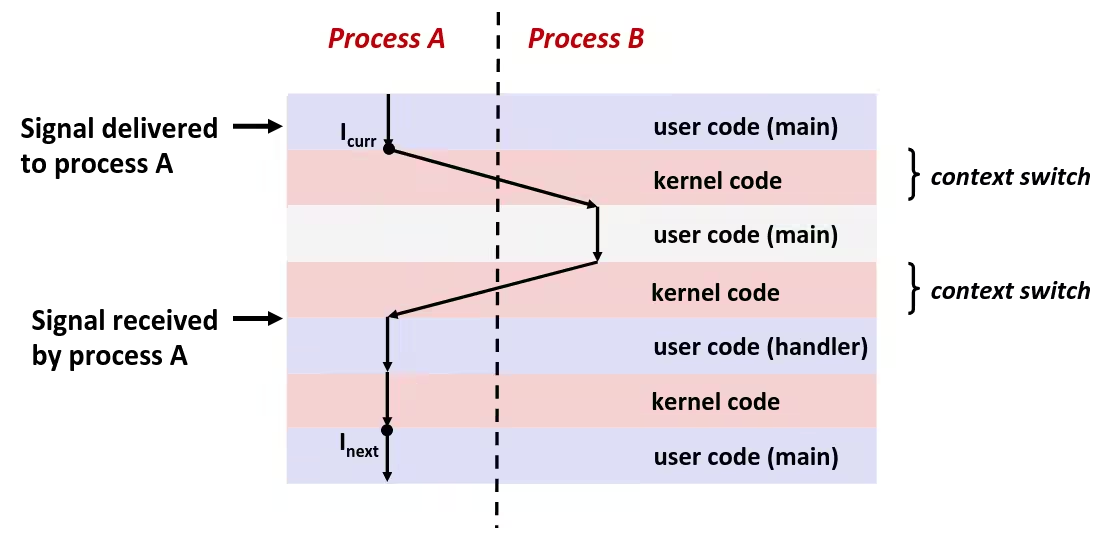

Context Switching

- Processes are managed by a shared chunk of memory-resident OS code called the kernel

- Important: the kernel is not a separate process, but rather runs as part of some existing process

- Control flow passes from one process to another via a context switch

Process Control

System Call Error Handling

- On error, Linux system-level functions typically return

‐1and set global variableerrnoto indicate cause - Hard and fast rule:

- You must check the return status of every system-level function

- Only exception is the handful of functions that return

void

if ((pid = fork()) < 0) { fprintf(stderr, "fork error: %s\n", strerror(errno)); exit(0);}Error-reporting functions

- Can simplify somewhat using an error-reporting function:

/* Unix-style error */void unix_error(char *msg) { fprintf(stderr, "%s: %s\n", msg, strerror(errno)); exit(0);}

if ((pid = fork()) < 0) unix_error("fork error");Error-handling Wrappers

- Simplify the present code even further by using Stevens-style error-handling wrappers:

pid_t Fork(void) { pid_t pid;

if ((pid = fork()) < 0) unix_error("Fork error"); return pid;}

pid = Fork();Processes States

From a programmer’s perspective, we can think of a process as being in one of three states:

- Running

- Process is either executing, or waiting to be executed and will eventually be scheduled (i.e., chosen to execute) by the kernel

- Stopped

- Process execution is suspended and will not be scheduled until further notice

- Terminated

- Process is stopped permanently

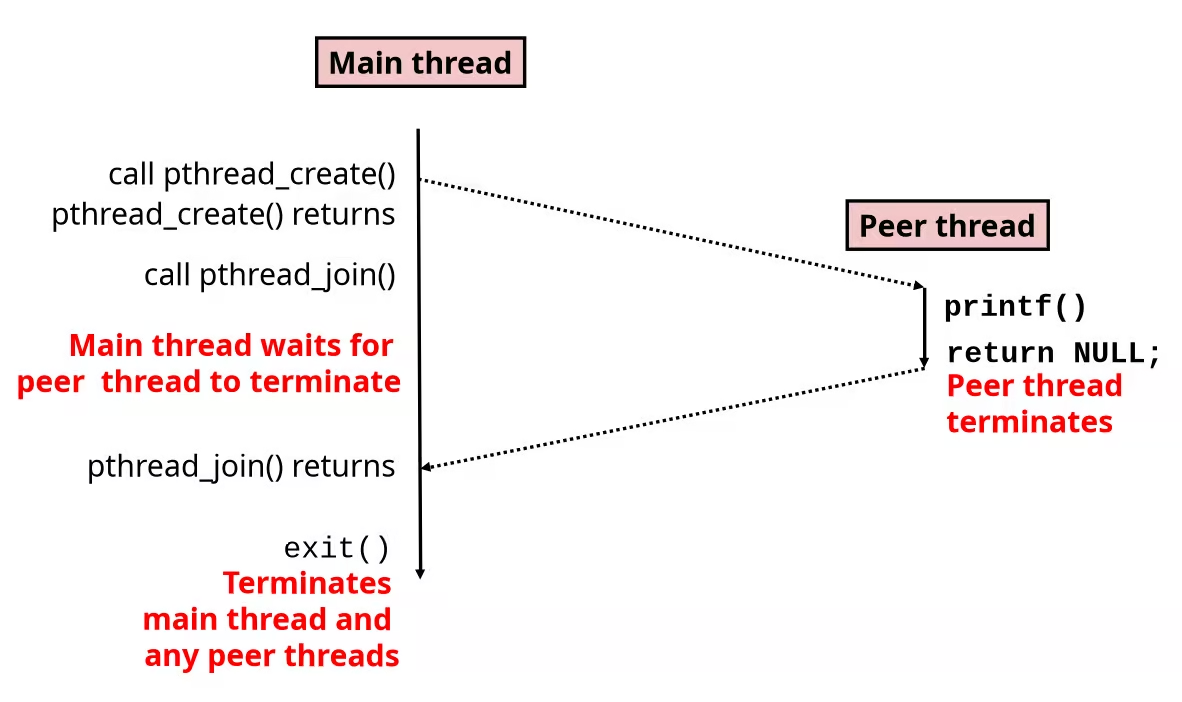

Creating Processes

- Parent process creates a new running child process by calling

fork int fork(void)- Returns

0to the child process, child’s PID to parent process - Child is almost identical to parent:

- Child get an identical (but separate) copy of the parent’s virtual address space

- Child gets identical copies of the parent’s open file descriptors

- Child has a different PID than the parent

- Returns

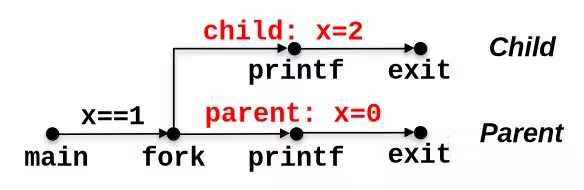

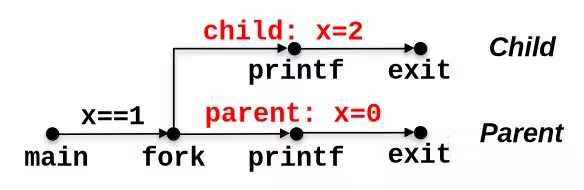

forkis interesting (and often confusing) because it is called once but returns twice

fork Example

int main() { pid_t pid; int x = 1;

pid = Fork(); if (pid == 0) { /* Child */ printf("child: x=%d\n", ++x); exit(0); }

/* Parent */ printf("parent: x=%d\n", --x); exit(0);}linux> ./forkparent: x=0child: x=2- Call once, return twice

- Concurrent execution

- Can’t predict execution order of parent and child

- Duplicate but separate address space

xhas a value of 1 when fork returns in parent and child- Subsequent changes to

xare independent

- Shared open files

stdoutis the same in both parent and child

Terminating Processes

- Process becomes terminated for one of three reasons:

- Receiving a signal whose default action is to terminate

- Returning from the

mainroutine - Calling the

exitfunction

void exit(int status)- Terminates with an exit status of status

- Convention: normal return status is

0, nonzero on error - Another way to explicitly set the exit status is to return an integer value from the main routine

exitis called once but never returns

Modeling fork with Process Graphs

- A process graph is a useful tool for capturing the partial ordering of statements in a concurrent program:

- Each vertex is the execution of a statement

a ‐> bmeansahappens beforeb- Edges can be labeled with current value of variables

printfvertices can be labeled with output- Each graph begins with a vertex with no inedges

- Any topological sort of the graph corresponds to a feasible total ordering

- Total ordering of vertices where all edges point from left to right

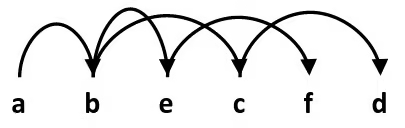

Process Graph Example

int main() { pid_t pid; int x = 1;

pid = Fork(); if (pid == 0) { /* Child */ printf("child: x=%d\n", ++x); exit(0); }

/* Parent */ printf("parent: x=%d\n", --x); exit(0);}

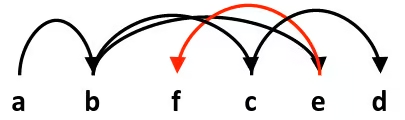

Interpreting Process Graphs

- Original graph:

- Relabled graph:

- Feasible total ordering:

- Infeasible total ordering:

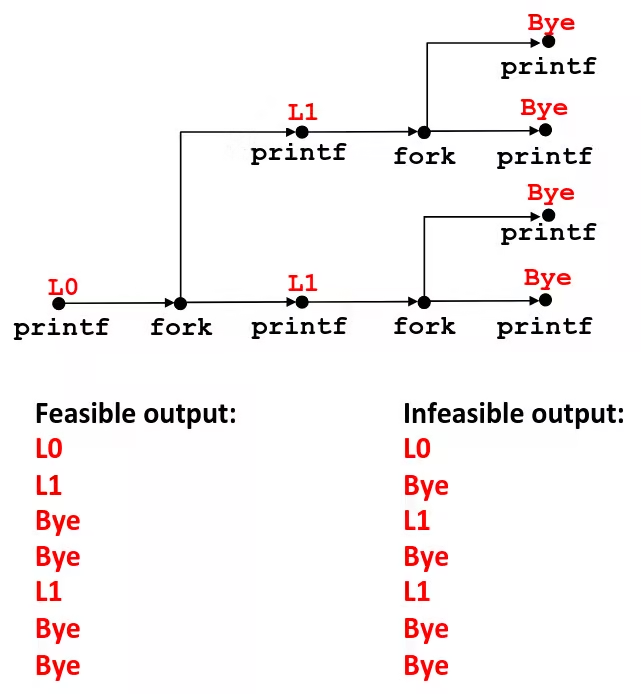

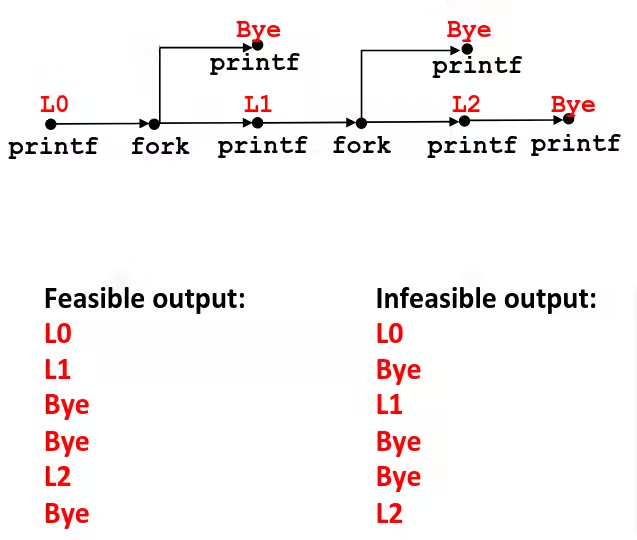

fork Example: Two consecutive forks

void fork2() { printf("L0\n"); fork(); printf("L1\n"); fork(); printf("Bye\n");}

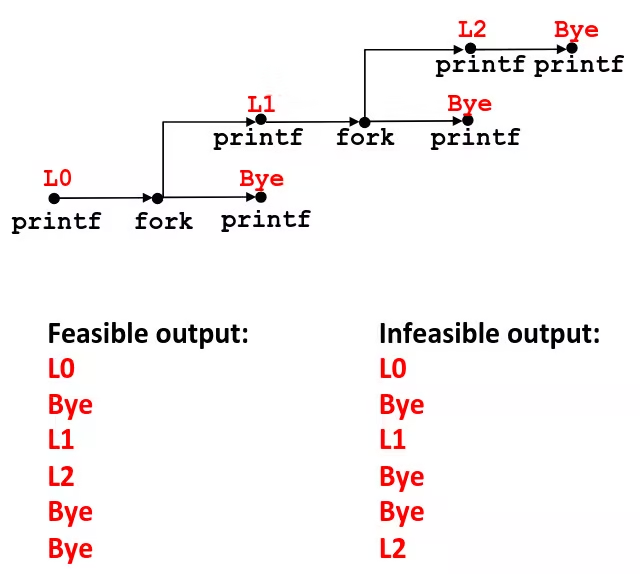

fork Example: Nested forks in parent

void fork4() { printf("L0\n"); if (fork() != 0) { printf("L1\n"); if (fork() != 0) { printf("L2\n"); } } printf("Bye\n");}

fork Example: Nested forks in children

void fork5() { printf("L0\n"); if (fork() == 0) { printf("L1\n"); if (fork() == 0) { printf("L2\n"); } } printf("Bye\n");}

Reaping Child Processes

- Idea

- When process terminates, it still consumes system resources (e.g., Exit status, various OS tables)

- Called a

zombie(Living corpse, half alive and half dead)

- Reaping

- Performed by parent on terminated child (using

waitorwaitpid) - Parent is given exit status information

- Kernel then deletes zombie child process

- Performed by parent on terminated child (using

- What if parent doesn’t reap ?

- If any parent terminates without reaping a child, then the orphaned child will be reaped by

initprocess (pid == 1) - So, only need explicit reaping in long-running processes (e.g., shells and servers)

- If any parent terminates without reaping a child, then the orphaned child will be reaped by

Zombie Example

void fork7() { if (fork() == 0) { /* Child */ printf("Terminating Child, PID = %d\n", getpid()); exit(0); } else { printf("Running Parent, PID = %d\n", getpid()); while (1) ; /* Infinite loop */ }}linux> ./forks 7 &[1] 6639Running Parent, PID = 6639Terminating Child, PID = 6640linux> psPID TTY TIME CMD6585 ttyp9 00:00:00 tcsh6639 ttyp9 00:00:03 forks6640 ttyp9 00:00:00 forks <defunct>6641 ttyp9 00:00:00 pslinux> kill 6639[1] Terminatedlinux> psPID TTY TIME CMD6585 ttyp9 00:00:00 tcsh6642 ttyp9 00:00:00 pspsshows child process as “defunct” (i.e., a zombie)- Killing parent allows child to be reaped by

init

Non-terminating Child Example

void fork8() { if (fork() == 0) { /* Child */ printf("Running Child, PID = %d\n", getpid()); while (1) ; /* Infinite loop */ } else { printf("Terminating Parent, PID = %d\n", getpid()); exit(0); }}linux> ./forks 8Terminating Parent, PID = 6675Running Child, PID = 6676linux> psPID TTY TIME CMD6585 ttyp9 00:00:00 tcsh6676 ttyp9 00:00:06 forks6677 ttyp9 00:00:00 pslinux> kill 6676linux> psPID TTY TIME CMD6585 ttyp9 00:00:00 tcsh6678 ttyp9 00:00:00 ps- Child process still active even though parent has terminated

- Must kill child explicitly, or else will keep running indefinitely

wait: Synchronizing with Children

- Parent reaps a child by calling the

waitfunction int wait(int *child_status)- Suspends current process until one of its children terminates

- Return value is the

pidof the child process that terminated - If

child_status != NULL, then the integer it points to will be set to a value that indicates reason the child terminated and the exit status:- Checked using macros defined in

wait.hWIFEXITED,WEXITSTATUS,WIFSIGNALED,WTERMSIG,WIFSTOPPED,WSTOPSIG,WIFCONTINUED

- Checked using macros defined in

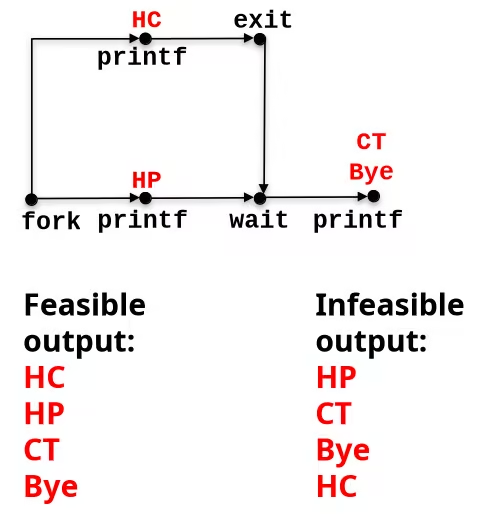

wait Example

void fork9() { int child_status;

if (fork() == 0) { printf("HC: hello from child\n"); exit(0); } else { printf("HP: hello from parent\n"); wait(&child_status); printf("CT: child has terminated\n"); } printf("Bye\n");}

Another wait Example

- If multiple children completed, will take in arbitrary order

- Can use macros

WIFEXITEDandWEXITSTATUSto get information about exit status

void fork10() { pid_t pid[N]; int i, child_status;

for (i = 0; i < N; i++) if ((pid[i] = fork()) == 0) exit(100+i); /* Child */ for (i = 0; i < N; i++) { /* Parent */ pid_t wpid = wait(&child_status); if (WIFEXITED(child_status)) printf("Child %d terminated with exit status %d\n", wpid, WEXITSTATUS(child_status)); else printf("Child %d terminate abnormally\n", wpid); }}waitpid: Waiting for a Specific Process

pid_t waitpid(pid_t pid, int &status, int options)- Suspends current process until specific process terminates

void fork11() { pid_t pid[N]; int i; int child_status;

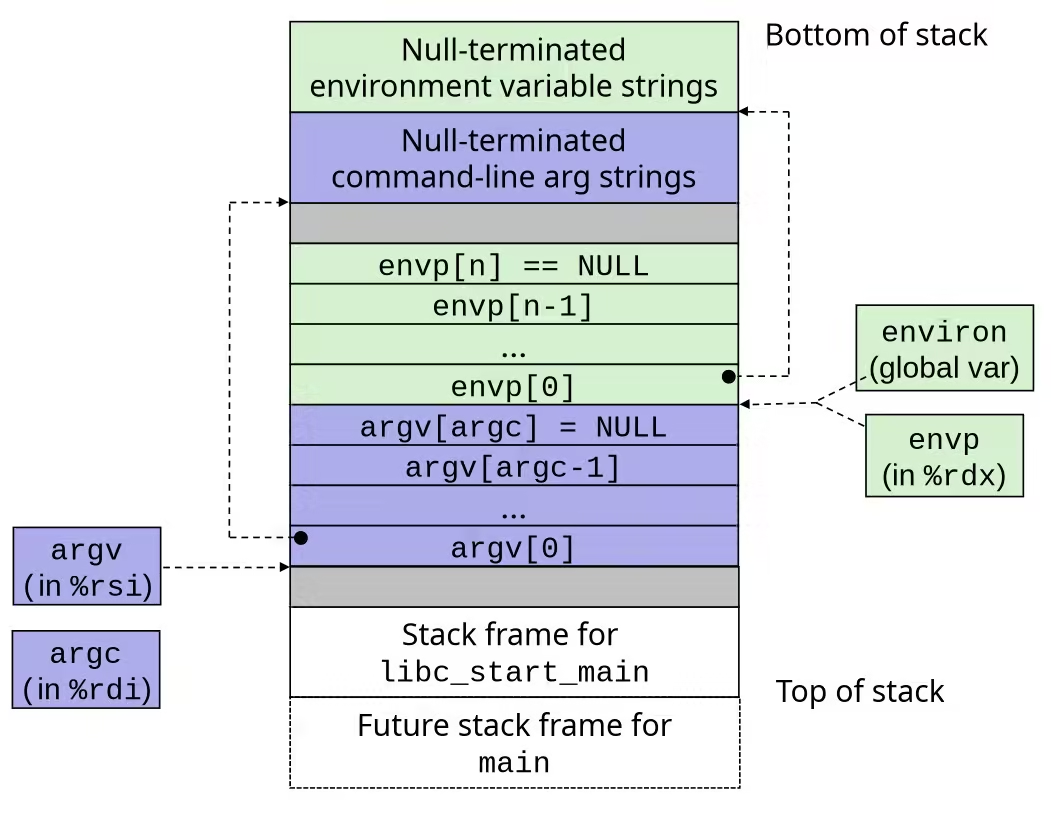

for (i = 0; i < N; i++) if ((pid[i] = fork()) == 0) exit(100+i); /* Child */ for (i = N-1; i >= 0; i--) { pid_t wpid = waitpid(pid[i], &child_status, 0); if (WIFEXITED(child_status)) printf("Child %d terminated with exit status %d\n", wpid, WEXITSTATUS(child_status)); else printf("Child %d terminate abnormally\n", wpid); }}execve: Loading and Running Programs

int execve(char *filename, char *argv[], char *envp[])- Loads and runs in the current process:

- Executable file

filename- Can be object file or script file beginning with

#!interpreter

- Can be object file or script file beginning with

- …with argument list

argv- By convention

argv[0] == filename

- By convention

- …and environment variable list

envpname=valuestrings (e.g.,USER=root)getenv,putenv,printenv

- Executable file

- Overwrites code, data, and stack

- Retains PID, open files and signal context

- Called once and never returns

- …except if there is an error

Structure of the stack when a new program starts

Signals

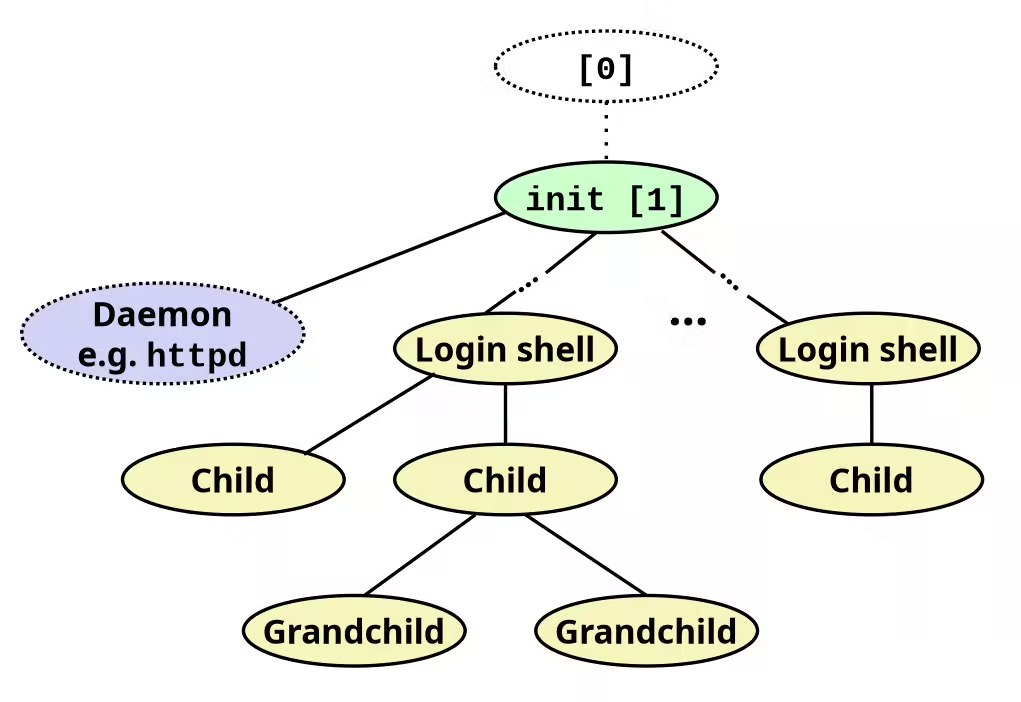

Linux Process Hierarchy

TIPYou can view the hierarchy using the

pstreecommand.

Shell Programs

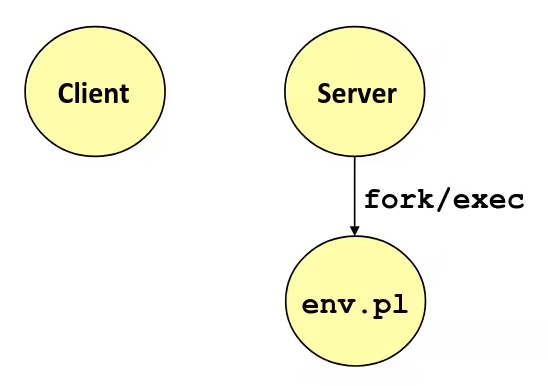

- A shell is an application program that runs programs on behalf of the user

sh: Original Unix shell (Stephen Bourne, AT&T Bell Labs, 1977)csh/tcsh: BSD Unix C shellbash: “Bourne-Again” Shell (default Linux shell)